Moonlight's Media Stack

As part of the Moonlight Beta release, I wanted to devote a few blog posts to exploring the features in Moonlight and how we implemented those Silverlight features in Moonlight.

Before I get started on today's topic, we would like to get some feedback from our users to find out which platforms they would like us to support with packages and media codecs. Please fill out our platform and media codec survey.

Moonlight 1.0 is an implementation of the Silverlight 1.0 API. It is an entirely self-contained plugin written in C++ and does not really provide any built-in scripting capabilities. All the scripting of an embedded Silverlight component is driven by the browser's JavaScript engine. This changes with the 2.0 implementation, but that is a topic for a future post.

The Silverlight/Moonlight Developer View.

One of the most important features of the Silverlight/Moonlight web plugin is to support audio and video playback.

Silverlight takes an interesting approach to video and audio playback. In Silverlight, video can be treated like any other visual component (such as rectangles and lines). This means that you can apply a number of affine transformations to the video (flip, rotate, scale, skew), compose the video with other elements, add a transparency layer to it, or add a clipping path to it.

This is the simplest incarnation of a video player in XAML:

    <Canvas xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml">
      <MediaElement x:Name="ExampleVideo"
                    Source="TDS.wmv"
                    Width="320" Height="240"
                    AutoPlay="true"/>
    </Canvas>

The result looks like this when embedded in a web page (or when using the mopen1 command that I am using to take the screenshots):

The MediaElement has a RenderTransform property that we can use to apply a transformation to it. In this case, we are going to skew the video by 45 degrees:

    <Canvas xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml">
      <MediaElement x:Name="ExampleVideo" AutoPlay="true" Source="TDS.wmv" Width="320" Height="240">
        <MediaElement.RenderTransform>
          <SkewTransform CenterX="0" CenterY="0" AngleX="45" AngleY="0" />
        </MediaElement.RenderTransform>
      </MediaElement>
    </Canvas>

The result looks like this:

But in addition to the above samples, MediaElements can be used as brushes to either fill or stroke other objects.

This means that you can "paint" text with video, or use the same video source to render the information in multiple places on the screen at the same time. You do this by referencing the MediaElement by name as a brush when you paint your text.

This shows how we can fill an ellipse with the video brush:

    <Canvas xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml">
      <MediaElement x:Name="ExampleVideo" AutoPlay="true" Opacity="0.0" Source="TDS.wmv" Width="320" Height="240"/>
      <Ellipse Width="320" Height="240" >
        <Ellipse.Fill>
          <VideoBrush SourceName="ExampleVideo"/>
        </Ellipse.Fill>
      </Ellipse>
    </Canvas>

The result looks like this:

You can also set the stroke for an ellipse. In the following example we use one video for the stroke, and one video for the fill. I set the stroke width to be 30 to make the video more obvious.

    <Canvas xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml">
      <MediaElement x:Name="ExampleVideo" AutoPlay="true" Opacity="0.0" Source="TDS.wmv" Width="320" Height="240"/>
      <MediaElement x:Name="launch" AutoPlay="true" Opacity="0.0" Source="launch.wmv" Width="320" Height="240"/>
      <Ellipse Width="320" Height="240" StrokeThickness="30">
        <Ellipse.Fill>
          <VideoBrush SourceName="ExampleVideo"/>
        </Ellipse.Fill>
        <Ellipse.Stroke>
          <VideoBrush SourceName="launch"/>
        </Ellipse.Stroke>
      </Ellipse>
    </Canvas>

Notice that in the examples above I have been using AutoPlay="true". Silverlight provides fine control over how the media is played, as well as a number of events that you can listen to from JavaScript (for example, you get events for buffering, for the media being opened, closed, or paused, or when you hit a "marker" on your video stream).

Streaming, Seeking and Playback

Depending on the source that you provide in the MediaElement, Moonlight will determine the way the video will be played back.

The simplest way of hosting a video or audio file is to place the audio or video file in a web server, and then have Moonlight fetch the file by specifying the Source attribute in the MediaElement. You do not need anything else to start serving videos.

Seeking in the standard Web server scenario: When the programmer requests the media to be played (either by calling the Play method on the MediaElement, or because the MediaElement has AutoPlay set to true), Moonlight will start buffering and play the video back.

If the user wants to seek backwards or forwards, Moonlight will automatically take care of this. If the user fast-forwards to a place in the file that has yet to be downloaded, playback will pause until that part of the file has been downloaded.
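The seek behavior in the plain web server scenario can be sketched roughly as follows. This is only an illustration of the idea, not Moonlight's actual code: the `ProgressiveDownload` class, its fields, and the use of byte offsets are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ProgressiveDownload:
    """Hypothetical model of a media file arriving over plain HTTP."""
    total_bytes: int
    downloaded_bytes: int = 0  # contiguous bytes received from the start

    def on_data(self, count: int) -> None:
        # Record newly received bytes, never exceeding the file size.
        self.downloaded_bytes = min(self.total_bytes, self.downloaded_bytes + count)

    def seek(self, byte_offset: int) -> str:
        # A seek into data we already have can play right away;
        # otherwise playback has to wait for the download to catch up.
        if not 0 <= byte_offset < self.total_bytes:
            raise ValueError("seek target is outside the file")
        return "play" if byte_offset < self.downloaded_bytes else "wait"
```

With 400 of 1000 bytes received, seeking to offset 100 plays immediately, while seeking to offset 700 waits until more data arrives.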

Seeking with an enhanced media server: If your server implements the Windows Media Streaming HTTP extension and the user seeks to a point in the file beyond the data that has been downloaded, Moonlight will send a special message to the server to seek. The server will start sending video from the new position, and playback starts immediately without the user having to wait. The details of the protocol are documented in the MS-WMSP specification. This is enabled by using the "mms://" prefix for your media files instead of the "http://" prefix.

Notice that even though the prefix says "mms", Silverlight and Moonlight do not actually speak to an MMS server. They merely replace that prefix with "http" and speak http/streaming to the server.
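The prefix replacement is easy to picture in code. Here is a minimal sketch; the function name `effective_url` and the example host are made up for illustration and do not come from Moonlight's source.

```python
from urllib.parse import urlsplit, urlunsplit


def effective_url(source: str) -> str:
    """Return the URL that would actually be requested for a media Source."""
    parts = urlsplit(source)
    if parts.scheme.lower() == "mms":
        # "mms" only signals that the streaming extension should be used;
        # the request itself still goes out over HTTP.
        parts = parts._replace(scheme="http")
    return urlunsplit(parts)
```

Calling `effective_url("mms://example.com/video/TDS.wmv")` yields an `http://` URL with the same host and path, and plain `http://` sources pass through unchanged.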

The extension is pretty simple: it is basically a "Pragma" header on the HTTP requests that contains the stream-time=POSITION value. Our client-side implementation is available here.

You can use IIS, or use mod_streaming, to enhance the video experience for your end users.

This basically means that you can stream videos on the cheap: all you need is a Linux box, two wires, and a 2HB pencil.
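To give a feel for what such a request looks like, here is a small sketch that builds (but does not send) an HTTP request carrying the header. The helper name `build_seek_request` is hypothetical, and the unit of POSITION and any other Pragma values a real client sends are defined by the MS-WMSP specification, so treat the value below as a placeholder.

```python
import urllib.request


def build_seek_request(url: str, position: int) -> urllib.request.Request:
    """Build an HTTP request asking the server to start at `position`.

    Assumption: `position` is passed through verbatim; consult MS-WMSP
    for the exact unit and for the other headers a real client needs.
    """
    request = urllib.request.Request(url)
    request.add_header("Pragma", f"stream-time={position}")
    return request
```

The request object can be inspected with `request.get_header("Pragma")` before handing it to `urllib.request.urlopen`.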

Adaptive Streaming

Another cool feature in Moonlight is its Adaptive Streaming support: the server can detect the client throughput and, depending on the bandwidth available, send either a high bitrate video or a low bitrate video. This is a server-side feature.

This feature was demoed earlier this year at Mix 08:

I am not aware of an adaptive streaming module for Apache.
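The server-side decision can be illustrated with a toy selection function. This is a sketch of the general idea only, not how IIS or any real server implements it; the function name, the bitrate ladder, and the fallback rule are all assumptions.

```python
def pick_bitrate(available_kbps: list[int], throughput_kbps: float) -> int:
    """Pick the highest encoded bitrate the client's throughput can sustain."""
    if not available_kbps:
        raise ValueError("no encodings available")
    # Keep only the encodings that fit within the measured throughput.
    sustainable = [rate for rate in available_kbps if rate <= throughput_kbps]
    # If nothing fits, fall back to the lowest bitrate rather than failing.
    return max(sustainable) if sustainable else min(available_kbps)
```

For a hypothetical ladder of 300, 700 and 1500 kbps, a client measured at 1000 kbps would be sent the 700 kbps stream.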

Supported Media Formats in Moonlight 1.0

Although Moonlight 1.0 exposes the Silverlight 1.0 API, it ships a 2.0 media stack (minus the DRM pieces). This means that Moonlight ships with support for the media codecs that are part of Silverlight 2.0 and supports adaptive streaming. This is what we are shipping:

Video:

- WMV1: Windows Media Video 7

- WMV2: Windows Media Video 8

- WMV3: Windows Media Video 9

- WMVA: Windows Media Video Advanced Profile, non-VC-1

- WMVC1: Windows Media Video Advanced Profile, VC-1

Audio:

- WMA 7: Windows Media Audio 7

- WMA 8: Windows Media Audio 8

- WMA 9: Windows Media Audio 9

- WMA 10: Windows Media Audio 10

- MP3: ISO/MPEG Layer-3

  - Input: ISO/MPEG Layer-3 data stream

  - Channel configurations: mono, stereo

  - Sampling frequencies: 8, 11.025, 12, 16, 22.05, 24, 32, 44.1, and 48 kHz

  - Bit rates: 8-320 kbps, variable bit rate

  - Limitations: "free format mode" (see ISO/IEC 11172-3, sub clause 2.4.2.3) is not supported.
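The MP3 constraints listed above can be captured in a small check. This is just a restatement of the list as code; the function name and parameters are invented for the example and are not part of Moonlight or Silverlight.

```python
# Sampling frequencies from the list above, expressed in Hz.
SUPPORTED_SAMPLE_RATES_HZ = {8000, 11025, 12000, 16000, 22050, 24000, 32000, 44100, 48000}


def mp3_stream_supported(channels: int, sample_rate_hz: int,
                         bitrate_kbps: int, free_format: bool = False) -> bool:
    """Return True if an MP3 stream falls within the listed limits."""
    return (
        channels in (1, 2)                            # mono or stereo
        and sample_rate_hz in SUPPORTED_SAMPLE_RATES_HZ
        and 8 <= bitrate_kbps <= 320                  # 8-320 kbps
        and not free_format                           # "free format mode" is unsupported
    )
```

A typical 44.1 kHz stereo file at 128 kbps passes the check, while a free format stream does not.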

We also support server-side playlists.

For more information see the Silverlight Audio and Video Overview document on MSDN.

Media Pipeline

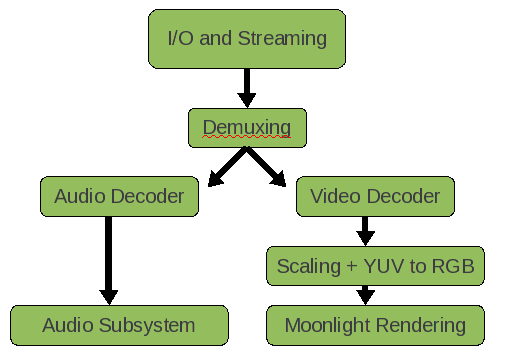

When we first prototyped Moonlight we used the ffmpeg media pipeline. A media pipeline looks like this:

Charts by the taste-impaired.

Originally ffmpeg handled everything for us: fetching media, demultiplexing it, decoding it and scaling it.
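The four steps can be thought of as stages chained one after another. The sketch below shows only that chaining; the stage names come from the sentence above, while `run_pipeline` and the handler dictionary are hypothetical and bear no relation to the ffmpeg or Moonlight APIs.

```python
# Order of the stages as described in the text.
STAGES = ("fetch", "demux", "decode", "scale")


def run_pipeline(source, handlers):
    """Feed `source` through each stage in order.

    `handlers` maps a stage name to a callable; each callable receives
    the output of the previous stage and returns the input for the next.
    """
    data = source
    for name in STAGES:
        data = handlers[name](data)
    return data
```

Writing your own pipeline, in this picture, amounts to supplying your own handler for each stage instead of letting one library own all four.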

Since we needed much more control over the entire pipeline, we had to write our own, one that was tightly integrated with Moonlight.

Today, if you download Moonlight's source code, you can build it with the ffmpeg codecs, or you can let Moonlight fetch the binary Microsoft Media Pack and use Microsoft's codecs on Linux.

Microsoft Media Pack

The Microsoft Media Pack is a binary component that contains the same code that Microsoft is using on their Silverlight product.

The Moonlight packages that we distribute do not actually have any media codecs built into them.

The first time that Moonlight hits a page that contains media, it will ask you whether you want to install the Microsoft Media Pack, which contains the codecs for all of the formats listed above.

Today Microsoft offers the media codecs for Linux on x86 and Linux x86-64 platforms. We are looking for your feedback to find out for which other platforms we should ship binary codecs.

Tests

No animals were harmed in the development of the Moonlight Video Stack. To ensure that our pipeline supported all of the features that Microsoft's Silverlight implementation supports, we used a number of video compliance tests that Microsoft provided us with as part of the joint Microsoft-Novell collaboration.

In addition to Microsoft's own tests, we created our own set of open source tests. All of these tests are available from the moon/test/media module. This includes videos that are specially encoded with all the possible combinations and features used, as well as XAML files and associated JavaScript.

Posted on 02 Dec 2008
