We did it!

Today Novell announced in Paris that Peugeot Citroën will be deploying 20,000 Linux desktops and 2,500 Linux servers.

This is very important news. Linux on the desktop has got good traction with governments, but it is just great to see the open source desktop being chosen for commercial deployments of this size.

Congratulations to the team!

I know that folks have been working really hard for the past few months to make sure that our server and desktop offerings were solid and that they met the needs of a large organization.

Congrats to Anna's team for all their hard work in doing the usability studies that made the desktop so much better, and to all the desktop hackers who worked on making all those features happen.

For those of you considering a migration to Vista, you might want to see Novell's Compare to Vista web site.

Btw, I think someone should do a "We did it" animation like the one that shows up in Stephen Colbert's Colbert Report, as it captures the emotion of making this deal happen.

Posted on 30 Jan 2007

The EU Prosecutors are Wrong.

The file format war between the Open Document Format (ODF) and Office Open XML (OOXML) is getting heated.

There are multiple discussions taking place and I have been ignoring them for the most part.

This morning I read on News.com an interview with Thomas Vinje. Thomas is part of the legal team representing some companies in the EU against Microsoft.

The bit in the interview that caught my attention was the following quote:

We filed our complaint with the Commission last February, over Microsoft's refusal to disclose their Office file formats (.doc, .xls, and .ppt), so it could be fully compatible and interoperable with others' software, like Linux. We also had concerns with their collaboration software with XP, e-mail software, and OS server software and some media server software with their existing products. They use their vast resources to delay things as long as possible and to wear people down so they'll give up.

And in July, we updated our complaint to reflect our concerns with Microsoft's "open XML." (Microsoft's Office Open XML is a default document format for its Office 2007 suite.) And last month, we supplemented that information with concerns we had for .Net 3.0 (software designed to allow Vista applications to run on XP and older-generation operating systems).

And I think that the group is not only shooting themselves in the foot, they are shooting all of our collective open source feet.

I'll explain.

Open Source and Open Standards

For a few years, those of us advocating open source software have found an interesting niche to push open source: the government niche.

The argument goes along these lines: Open Office is just as good as Microsoft Office; Open Office is open source, so it comes for free; You can actually nurture your economy if you push for a local open source software industry.

The argument makes perfect sense and most people will agree with it, but every once in a while our advocacy has faced some problems: Microsoft Office might have some features that we do not have, the cost of migration is not zero, existing licensing deals sweeten the pot, and there are compatibility corner cases that slow down the adoption.

A new powerful argument was identified a few years back, when Congressman Edgar Villanueva in 2002 replied to a letter from Microsoft's Peru General Manager.

One of the key components at the time was that the government would provide free access to government information and ensure the permanence of public data. On those two points the letter said:

To guarantee the free access of citizens to public information, it is indispensable that the encoding of data is not tied to a single provider. The use of standard and open formats gives a guarantee of this free access, if necessary through the creation of compatible free software. To guarantee the permanence of public data, it is necessary that the usability and maintenance of the software does not depend on the goodwill of the suppliers, or on the monopoly conditions imposed by them. For this reason the State needs systems the development of which can be guaranteed due to the availability of the source code.

The letter is a great document, but the bit that I am interested in is the bit about open standards.

Using Open Standards to Promote Open Source

Open standards and the need for public access to information made for a strong message. This became a key component of promoting OpenOffice and open source software. This posed two problems:

First, those promoting open standards did not stress the importance of having a fully open source implementation of an office suite.

Second, it assumed that Microsoft would stand still and would not react to this new change in the market.

And that is where the strategy to promote the open source office suite is running into problems. Microsoft did not stand still. It reacted to this new requirement by creating a file format of its own, OOXML.

Technical Merits of OOXML and ODF

Unlike the XML Schema vs Relax NG discussion where the advantages of one system over the other are very clear, the quality differences between the OOXML and ODF markup are hard to articulate.

The high-level comparisons so far have focused on tiny details (encoding, model used for the XML). There is nothing fundamentally better or worse in those standards like there is between XML Schema and Relax NG.

ODF grew out of OpenOffice.org and is influenced by its internal design. OOXML grew out of Microsoft Office and it is influenced by its internal design. No real surprises there.

The Size of OOXML

A common objection to OOXML is that the specification is "too big", that 6,000 pages is a bit too much for a specification and that this would prevent third parties from implementing support for the standard.

Considering that for years we, the open source community, have been trying to extract as much information about protocols and file formats from Microsoft, this is actually a good thing.

For example, many years ago, when I was working on Gnumeric, one of the issues that we ran into was that the descriptions of functions and formulas in Excel were not entirely accurate in the public books you could buy.

OOXML devotes 324 pages of the standard to document the formulas and functions.

The original submission to the ECMA TC45 working group did not have any of this information. Jody Goldberg and Michael Meeks, who represented Novell at TC45, requested the information and it eventually made it into the standard. I consider this a win, and I consider those 324 extra pages a win for everyone (almost half the size of the ODF standard).

Depending on how you count, ODF has 4 to 10 pages devoted to formulas. There is no way you could build spreadsheet software based on this specification.

To build a spreadsheet program based on ODF you would have to resort to the source code of an existing implementation (OpenOffice.org, Gnumeric), to Microsoft's public documentation or, ironically, to the OOXML specification.

The ODF Alliance in their OOXML Fact Sheet conveniently ignores this issue.

I guess the fairest thing that can be said about a spec that is 6,000 pages long is that printing it out kills too many trees.

Individual Problems

There is a compilation being tracked in here, but some of the objections there show that the people writing those objections do not understand the issues involved.

Do as I say, not as I do

Some folks have been using a Wiki to keep track of the issues with OOXML. The motivation for tracking these issues seems to be politically inclined, but it manages to pack some important technical issues.

The site is worth exploring and some of the bits there are solid, but there are also some flaky cases.

Some of the objections over OOXML are based around the fact that it does not use existing ISO standards for some of the bits in it. They list 7 ISO standards that OOXML does not use: 8601 dates and times; 639 names and languages; 8632 computer graphics and metafiles; 10118-3 cryptography as well as a handful of W3C standards.

By comparison, ODF only references three ISO standards: Relax NG (OOXML also references this one), 639 (language codes) and 3166 (country codes).

Not only is it demanded that OOXML abide by more standards than ISO's own ODF does, but also that the format used for metafiles from 1999 be used. It seems like it would prevent some nice features developed in the last 8 years for no other reason than "there was a standard for it".

ODF uses SMIL and SVG, but if you save a drawing done in a spreadsheet it is not saved as SVG, it is saved using its own format (Chapter 9) and sprinkles a handful of SVG attributes to store some information (x, y, width, height).

There is an important-sounding "Ecma 376 relies on undisclosed information" section, but it is a weak case: the complaint is that Windows Metafiles are not specified.

The format is certainly not in the standard, but the information is publicly available and is hardly "undisclosed information". I would vote to add the information to the standard.

More on the Size of the Spec

A rough breakdown of OOXML:

- ~100 page "Fundamentals" document;

- ~200 page "Packaging Conventions" document;

- ~450 page "Primer" document (a tutorial);

- ~1850 page Word Processing reference document;

- ~1090 page Spreadsheet Processing reference document;

- ~270 page Presentation Processing reference document;

- ~1140 page Drawing Processing reference document;

- ~900 pages for other references (VML, SharedML);

- ~42 page future extensibility document.
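
As a quick sanity check on the "6,000 pages" figure, the approximate counts in the breakdown above can simply be added up. This is only a sketch: the numbers are the rounded ones from the list, and the names `OOXML_PARTS`, `total_pages` and `shares` are made up for the illustration.

```python
# Approximate page counts, copied from the breakdown above.
# The dictionary and variable names are illustrative only.
OOXML_PARTS = {
    "Fundamentals": 100,
    "Packaging Conventions": 200,
    "Primer": 450,
    "Word Processing reference": 1850,
    "Spreadsheet Processing reference": 1090,
    "Presentation Processing reference": 270,
    "Drawing Processing reference": 1140,
    "Other references (VML, SharedML)": 900,
    "Future extensibility": 42,
}

total_pages = sum(OOXML_PARTS.values())

# Share of the total taken by each part, largest first.
shares = sorted(
    ((name, pages / total_pages) for name, pages in OOXML_PARTS.items()),
    key=lambda item: item[1],
    reverse=True,
)

print(f"total: ~{total_pages} pages")
for name, share in shares:
    print(f"{name}: {share:.0%}")
```

With these rounded figures the total lands at roughly 6,000 pages, and the four reference documents account for most of it.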

I have obviously not read the entire specification, and I am biased towards what I have seen from the spreadsheet angle. But considering that it is impossible to implement a spreadsheet program based on ODF, I am convinced that the analysis done by those opposing OOXML is incredibly shallow. The burden is on them to prove that ODF is "enough" to implement alternative applications from scratch.

If Microsoft had produced 760 pages (the size of ODF) as the documentation for the ".doc", ".xls" and ".ppt" that lacked for example the formula specification, wouldn't people justly complain that the specification was incomplete and was useless?

I would have to agree at that point with the EU that not enough information was available to interoperate with Microsoft Office.

If anything, if I was trying to interoperate with Microsoft products, I would request more, not less.

SVG

Then there is the charge about not using SVG in OOXML. There are a few things to point out about SVG.

Referencing SVG would pull virtually every spec that the W3C has produced (Javascript, check; CSS, check; DOM, check).

This can be deceptive in terms of the "size" of the specification, but it also makes SVG incredibly complex to support. To this date I am not aware of a complete open source SVG implementation (and Gnome has been at the forefront of trying out SVG, going back to 1999).

But to make things worse, OpenOffice does not support SVG today, and interop in the SVG land leaves a lot to be desired.

Some of my friends that have had to work with SVG have complained extensively to me in the past about it. One friend said "Adobe has completely hijacked it" referring to the bloatedness of SVG and how hard it is to implement it today.

At the time of this comment, Adobe had not yet purchased Macromedia, and it seemed like Adobe was using the standards group and SVG to compete against Flash, making SVG unnecessarily complicated.

Which is why open source applications only support a subset of SVG, a sensible subset.

ISO Standardization

ODF is today an ISO standard. It spent some time in the public before it was given its stamp of approval.

There is a good case to be made for OOXML to be further fine-tuned before it becomes an ISO standard. But considering that Office 2007 has shipped, I doubt that any significant changes to the file format would be implemented in the short or medium term.

The best possible outcome in delaying the stamp of approval for OOXML would be to get further clarifications on the standard. Delaying it on the grounds of technical limitations is not going to help much.

Considering that ODF spent a year receiving public scrutiny and still has holes the size of the Gulf of Mexico, it seems that the call for delaying OOXML's adoption is politically based and not technically based.

XAML and .NET 3.0

From another press release from the group:

"Vista is the first step in Microsoft's strategy to extend its market dominance to the Internet," Awde stressed. For example, Microsoft's "XAML" markup language, positioned to replace HTML (the current industry standard for publishing language on the Internet), is designed from the ground up to be dependent on Windows, and thus is not cross-platform by nature....

"With XAML and OOXML Microsoft seeks to impose its own Windows-dependent standards and displace existing open cross-platform standards which have wide industry acceptance, permit open competition and promote competition-driven innovation. The end result will be the continued absence of any real consumer choice, years of waiting for Microsoft to improve - or even debug - its monopoly products, and of course high prices," said Thomas Vinje, counsel to ECIS and spokesman on the issue.

He is correct that XAML/WPF will likely be adopted by many developers and probably some developers will pick it over HTML development.

I would support and applaud his efforts to require the licensing of the XAML/WPF specification under the Microsoft Open Specification Promise.

But he is wrong about XAML/WPF being inherently tied to Windows. XAML/WPF are large bodies of code, but they expose fewer dependencies on the underlying operating system than .NET 2.0's Windows.Forms API does. It is within our reach to bring them to Linux and MacOS.

We should be able to compete on technical grounds with Microsoft's offerings. Developers interested in bringing XAML/WPF to other platforms can join the Mono project; we have some bits and pieces implemented as part of our Olive subproject.

I do not know how fast the adoption of XAML/WPF will be, considering that unlike previous iterations of .NET, gradual adoption of WPF is not possible. Unlike .NET 2.0 which was an incremental upgrade for developers, XAML/WPF requires software to be rewritten to take advantage of it.

The Real Problem

The real challenge today that open source faces in the office space is that some administrations might choose to move from the binary office formats to the OOXML formats and that "open standards" will not play a role in promoting OpenOffice.org nor open source.

What is worse is that even if people manage to stop OOXML from becoming an ISO standard it will be an ephemeral victory.

We need to recognize that this is the problem, instead of trying to bury OOXML, which amounts to covering the sun with your finger.

We need to make sure that OpenOffice.org can thrive on its technical grounds.

In Closing

This is not a complete study of the problems that OOXML has. As I said, it has its share of issues, but so does the current ODF standard.

To make ODF successful, we need to make OpenOffice.org a better product, and we need to keep improving it. It is very easy to nitpick a standard, especially one that is as big as OOXML. But it is a lot harder to actually improve OpenOffice.org.

If everyone complaining about OOXML were actually hacking on OpenOffice.org to make it a technically superior product in every sense, we would not have to resort, as a community, to playing a political case on weak grounds.

I also plan on updating this blog entry as people correct me (unlike ODF, my blog entries actually contain mistakes ;-)

Updates -- February 1st

There are a few extra points that I was made aware of after I posted this blog entry.

Standard Size

Christian Stefan wrote me to point out that the OOXML specification published by ECMA uses 1.5 line spacing, while OASIS uses single spacing. I quote from his message:

ODF 722 pages

SVG 719

MathML 665

XForms 152 (converted from html using winword, ymmv)

XLink 36 (converted from html using winword, ymmv)

SMIL 537 (converted from html using winword, ymmv)

OpenFormula 371

----

3,202

Now I'm still missing some standards that would add several hundred pages and changing line spacing to 1.5 will bring me near the 6000 pages mark I guess. This is not very surprising (at least for me) since both standards try to solve very similar problems with nearly equal complexity.
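
Christian's tally is easy to re-check. The sketch below adds up the page counts he lists and scales the result to 1.5 line spacing, under the loud assumption that page count grows linearly with line spacing (headings, tables and figures will not scale exactly that way). The names `PAGE_COUNTS` and `LINE_SPACING_FACTOR` are made up for the illustration.

```python
# Page counts as listed in Christian Stefan's message (single spaced).
PAGE_COUNTS = {
    "ODF": 722,
    "SVG": 719,
    "MathML": 665,
    "XForms": 152,
    "XLink": 36,
    "SMIL": 537,
    "OpenFormula": 371,
}

# Assumption: moving from single to 1.5 line spacing multiplies
# the page count by 1.5. This is a rough approximation only.
LINE_SPACING_FACTOR = 1.5

single_spaced_total = sum(PAGE_COUNTS.values())
estimated_total = single_spaced_total * LINE_SPACING_FACTOR

print(f"single spaced: {single_spaced_total} pages")
print(f"at 1.5 spacing: ~{estimated_total:.0f} pages")
```

Under that assumption the listed standards alone come to roughly 4,800 pages, so the standards still missing from the list would have to close the remaining gap to the 6,000 mark.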

Review Time

The "one month to review OOXML" meme that has been going around the net turns out to be false.

Posted on 30 Jan 2007

OpenSUSE Build System

For the last couple of years the folks at SUSE have been building a new build system that could help us make packages for multiple distributions from the same source code.

Yesterday, Andreas Jaeger announced the open sourcing of the Open SUSE Build Service (the post is archived here).

The service has been in beta testing for a few months.

The Problem

One of the problems that the Linux community faces is that a binary package built in one distribution will not necessarily work on a different distribution. Distributions have adopted different file system layout configurations (from the trivial to the more complex), different versions of system libraries and configuration files are placed in different locations. In addition different revisions of the operating system will differ from release to release, so the same software will not always build cleanly.

Although there are efforts to unify and address this problem, these efforts have failed because they require all major distributions to agree on the file system layout and on the adoption of a common core. Without the buy-in of everyone, it is not possible to solve this problem, which has made the life of the Linux Standard Base folks incredibly frustrating.

If you are ahead of the pack, the mentality has been that you will prevail over other distributions and become the only Linux distribution so there is no need to spend extra engineering work in trying to unify distributions.

At Ximian, when we were a small independent software vendor trying to distribute our software for Linux users (Evolution, and as a side effect the "Ximian Desktop") we had to set up internal build farms to target multiple operating systems. We created a build system (build-buddy) with "jails", which were a poor man's operating system virtualization system, to build packages for all of the Linux distributions.

To this date shipping software for multiple Linux distributions remains a challenge. For instance, look at the downloads page for Mono. We are targeting various distributions of Linux there, and we know we are missing many more, but setting up multiple Linux distributions, building on every one of them, running the tests and maintaining the systems takes a lot of time.

And this problem is faced by every ISV. Folks at Mozilla and VMware have horror stories to tell about how they have to ship their products to ensure that they run on a large number of distributions.

The OpenSUSE Build System

Originally, the OpenSUSE Build System was a hosted service running at SUSE. The goal of the build system was to build packages for various distributions from the same build specifications.

Currently it can build packages for Novell, Red Hat, Mandriva, Debian and Ubuntu distributions (the versions currently supported are: SUSE Linux 9.3, 10.0 and 10.1, OpenSUSE 10.2, SUSE Linux Enterprise 10, Debian Etch, Fedora Core 5, 6, Mandriva 2006 and Ubuntu 6.06).

For example, Aaron is packaging Banshee for a number of platforms; you can browse the packages here.

This hosted service meant that developers did not have to set up their own build farms; they could just use the build farms provided by SUSE.

With the open sourcing of the build system, third party ISVs and IT developers no longer have to use the hosted services at SUSE; they are now able to set up their own build farms inside their companies and produce packages for all of the above distributions. If you need to keep your code in-house, you can use the same functionality and set up your own build farms.

Currently those creating packages can use either a web interface or a command line tool to get packages built by the system (a nice API is available to write other front-ends; GUI front-ends are currently under development).

Testimonials

From the Scribus blog:

While we recently released 1.3.3.7 into the wild, one of the tools we used for the first time is the new OpenSuse Build Server. So why the rant? In a word it is terrific. :) It still is in beta phase and there are features to be added. However, it is a major helper for time stretched developers to package their application in a sane way and then automagically push them to rotating mirrors worldwide without a lot of fuss.

It is all fine and well if you write the best code in the world if no one can use it. And that means packaging. For Linux, that means creating a .deb or creating rpm packages which many Linux distributions use.

The magic is one can upload the source and a spec file which is a recipe to build rpms. Select which platforms to build, push a button and voila. In an hour or two you have all your packages built in a carefully controlled manner. This allowed us to supply 1.3.3.7 for all Suse releases 9.3+ including the Enterprise Desktop 10 for the first time. Even better for users, they can use what ever tool be it Yast, Smart or Yum to add these repositories to get the latest greatest Scribus with all dependencies automatically satisfied. Push button packaging for users too :)

Installation

The resulting packages that are submitted today to the build service can be installed with a number of popular software management tools including Smart, apt, yum and yast.

We hope that this cross-distribution build system will remove the burden of picking a subset of Linux distributions to support and will make more packages available for more distributions.

A tutorial on getting started is here.

Congratulations to the OpenSUSE team for this release!

Posted on 26 Jan 2007

Monkey Magic!

Linux Format Magazine is running a contest to get your idea implemented in Mono. The contest goes like this:

Get your dream application made! In Linux Format issue 89, our Mono cover feature includes a competition called Make it with Mono, where you design your ideal piece of software. Just jot down your suggestions, and in April other users will rate them -- if your entry makes it into the top 30, you'll win a cool Mono T-shirt. And if yours is the number 1 rated entry, it will be programmed in Mono!

To see the entries submitted so far, go to: Entries Page, and you can submit your application here.

Posted on 25 Jan 2007

Microsoft's Ajax.Net License

Microsoft recently announced the release of ASP.NET AJAX, their extension to ASP.NET to spice it up with Ajax goodness (Scott Guthrie's blog is always the best source for the details).

Microsoft must be commended for picking the Microsoft Permissive License (Ms-PL) for all of their client-side Javascript libraries and the AJAX Control Toolkit.

The Ms-PL is for all intents and purposes an open source license.

This is a diagram from the introduction to ASP.NET AJAX:

The left-hand side is what has been released under the Ms-PL. For the whole stack to work on Mono, we will have to do some work on the server-side assemblies that provide the support (the System.Web.Extensions assembly). It is likely a lot of work, but considerably less than the whole stack.

The AJAX Control Toolkit comes with 30 controls for common tasks, with a nice sampler of the controls. The Control Toolkit is actually being developed in the open, and Microsoft is integrating external contributions.

Nikhil has a retrospective on how the project came to be. A good chunk of information for those of us interested in the archaeology of software.

A big thanks to everyone at Microsoft involved in picking the Ms-PL license for these technologies.

Posted on 24 Jan 2007

SecondLife Updates

Jim has posted an update on using Mono to script SecondLife.

The challenges that they were facing were related to memory leaks that were experienced when they loaded and unloaded thousands of application domains (I gather this happens when they refresh a specific script in SecondLife).

Posted on 22 Jan 2007

Score Music in Wiki Form

Wikifonia, a Wiki to share sheet music, has been launched. It is in its early stages, but it already has some content.

Posted on 22 Jan 2007

Buck Stopping and the White House

In his Open Letter to the President, Ralph Nader points out that although Bush admitted "mistakes were made", he quickly moved on and changed the subject:

You say "where mistakes have been made, the responsibility rests with me." You then quickly change the subject. Whoa now, what does it mean when you say the responsibility for mistakes rest with you?

Responsibility for "mistakes" that led to the invasion-which other prominent officials and former officials say were based on inaccurate information, deceptions, and cover-ups?

Responsibility for the condoning of torture, even after the notorious events at abu-Gharib prison were disclosed?

Responsibility for months and months of inability to equip our soldiers with body armor and vehicle armor that resulted in over 1,000 lost American lives and many disabilities?

Responsibility for the gross mismanagement over outsourcing both service and military tasks to corporations such as Haliburton that have wasted tens of billions of dollars, including billions that simply disappeared without account?

Responsibility for serious undercounting of official U.S. injuries in Iraq-because the injuries were not incurred in direct combat-so as to keep down political opposition to the war in this country?

Click to read the rest of the letter.

Posted on 21 Jan 2007

Crap in Vista, part 2

Microsoft has posted some answers to questions regarding the Content, Restriction, Annulment and Protection (or CRAP, some people euphemistically call it "Digital Rights Management") features built into Vista. I previously blogged about Peter Gutmann's explanation of the problems that it would cause Vista.

Microsoft has responded to these claims here.

The response does little to contradict Peter's findings, and merely tries to shed some positive light on things. They answer questions in the best style of Ari Fleischer; for example, when it comes to cost, they conveniently ignore the question and instead focus on the manufacturing benefits (!!!).

It is interesting to compare this with the presentation by ATI at Microsoft's Hardware conference in 2005 (WinHEC), Digital Media Content Protection, which takes a more honest look at the costs all throughout the stack.

The discussion at Slashdot browsed at +4 has the good stuff.

Posted on 21 Jan 2007

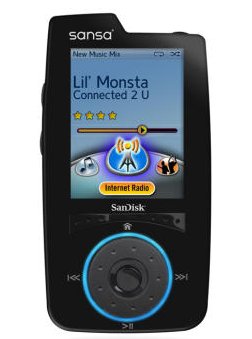

Sansa Connect Video

You can see the UI of the Sansa Connect in action in this Engadget video:

It apparently will also let you browse your Flickr (wonder if they use the Flickr.Net API) and share songs with your friends, and it seems to have some sort of last-fm like listening mode.

The details on the song sharing are not very clear; it all seems bound to the service. But I wonder if you can pass around your own mp3s.

Anyways, am buying this on the grounds that it runs Mono, and so I can finally show a physical object to the family that runs it.

Posted on 18 Jan 2007

Mono-based device wins Best-of-Show at CES

The SanDisk Sansa Connect MP3 player won the Best of Show award in the MP3 and Audio category.

The Sansa Connect is running Linux as its operating system, and the whole application stack is built on Mono, running on an ARM processor.

There is a complete review of it at Engadget. Among the features it includes:

- 4GB of memory.

- SD slot for extra storage.

- WMA, MP3, subscription WMA and PlaysForSure are supported.

- Internet Radio streaming.

- WiFi.

- Photo browser.

- 2.2-inch TFT color screen.

The WiFi support allows users to download music from providers or their own home servers.

The Sansa Connect is designed by the great guys at Zing and it will be available to purchase in a couple of months.

Posted on 17 Jan 2007

Not a Gamer

With all the rage and discussion over the PS3 vs the Wii and how the Wii is a breakthrough in interactivity, I decided to get myself one of those, and auctioned my way to one on eBay.

The last time I owned a console it was an Atari 2600, and I barely got to play with it.

Wii Sports is a very nice program, and I enjoy playing it. As reported, your arms are sore the next day after playing Wii Tennis.

I went to the local game store and bought some assorted games for the Wii; the clerk said "excellent choices sir", as if I was picking a great cheese at Formaggio Kitchen.

The graphics are amazing, but I could not stand playing any of them. The Zelda graphics are incredibly well done.

But all I could think of was the poor guy in QA that must have been filing bugs against this. Man, do I feel sorry for the QA guys that do games.

But I just do not feel like solving Zelda. Am sure it must have some kind of appeal to some people, but solving puzzles in a 3D world and shooting at stuff and earning points did not sound like an interesting enough challenge. I can appreciate the cuteness of having to find the cradle in exchange for the fishing pole and shooting rocks and "zee-targeting" something.

As far as challenges go, to me, fixing bugs, or writing code is a more interesting puzzle to solve. And for pure entertainment, my blogroll and reddit provide a continuous stream of interesting topics.

Am keeping the Wii though. Playing Wii box and Wii tennis with Laura is incredibly fun (well, watching Laura play Wii Box accounts for 80% of the fun).

When I got the Wii, I told myself "If I like this, am getting the PS3 and the XBox". Well, I actually just thought about it, I do not really talk to myself.

But am obviously not a gamer.

Posted on 11 Jan 2007

Programmer Guilt

With Mono, it has often happened that I have wanted to work on a fun new feature (say, C# 3) or implement a fun new class.

But most of the time, just when am about to do something fun for a change, I think "don't we have a lot of bugs out there to fix?", so I take a look at bugzilla and try to fix them, follow up on some bugs, try to produce test cases.

By the time am done, I have no energy left for the fun hack.

I need some "guilt-free" time, where I can indulge in doing some work that is completely pointless. But there is a fine balance between happy-fun-fun hacking, and making sure that Mono gets a great reputation.

Posted on 11 Jan 2007

Mono and C# 3.0

Since a few folks have asked on irc and they are not using my innovative comment-reading service, am reposting (with some slight edits and clarifications) the answer to "Will Mono implement C# 3.0?"

Yes, we will be implementing C# 3.0.

We have been waiting for two reasons: first, we wanted to focus on bug fixing the existing compilers to ensure that we have a solid foundation to build upon. I very much like the Joel Test that states `Do you fix bugs before writing new code?'.

C# 3.0 is made up of about a dozen new small features. The features are very easy to implement but they rely heavily on the 2.0 foundation: iterators, anonymous methods, variable lifting and generics.

The second reason is that, since we have been fixing and improving the 2.0 compiler anyway, we get to implement the next draft of the specification instead of the current draft. This means that there is less code to rewrite when and if things change.

Fixing bugs first turned out to be really important. In C# 3.0, lambda functions are built on the foundation laid out by anonymous methods, and it turned out that our anonymous method implementation, even a few months ago, had some very bad bugs in it. It took Martin Baulig a few months to completely fix it. I wrote about Martin's work here.

The second piece is LINQ. Some bits and pieces of the libraries have been implemented, and those live in our Olive subproject. Alejandro Serrano and Marek Safar have contributed the core of the Query space, and Atsushi did some of the work on the XML Query libraries. We certainly could use help and contributions in that area.

Anecdote: we started on the C# 2.0 compiler about six months before C# 2.0 was actually announced at the 2003 PDC. Through ECMA we had early access to the virtual machine changes to support generics, and the language changes to C#. By the time of the PDC we had an almost functional generics compiler.

The spec was not set in stone, and it would change in subtle places for the next two years. So during the next two years we worked on and off on completing the support and implementing the changes to the language as they happened.

Most of the hard test cases came when C# 2.0 was released to the public as part of .NET 2.0. About six weeks into the release (mid-December and January) we started receiving a lot of bug reports from people that were using the new features.

Second Mini-Anecdote: Pretty much every new release of IronPython has exposed limitations in our runtime, our class libraries or our compilers. IronPython has really helped Mono become a better runtime.

Posted on 11 Jan 2007

Keith Olbermann Evaluation of the Iraq War

Just minutes before the speech last night, Keith Olbermann had a quick recap of the mistakes made so far.

Loved the delivery. Crooks and Liars has the video and the transcript (the video is better, as it has a moving sidebar with the summary, like Colbert's "The Word").

Posted on 11 Jan 2007

Functional Style Programming with C#

C# 3.0 introduces a number of small enhancements to the language. The combination of these enhancements is what drives LINQ.

Although much of the focus has been on the SQL-like feeling that it gives the language to manipulate collections, XML and databases in an efficient way, some fascinating side effects are explored in this tutorial.

The tutorial introduces the new features in C# one by one. There are a couple of interesting examples, starting with a simple functional-style loop:

// From:
for (int i = 1; i < 10; ++i) Console.WriteLine(i);

// To:
Sequence.Range(1, 10).ForEach(i => Console.WriteLine(i));
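The `Sequence.Range` and `ForEach` calls above come from the tutorial's preview bits. As a rough sketch of the same idea, assuming the `System.Linq.Enumerable.Range` method (whose second argument is a count, not an upper bound) and a hypothetical `ForEach` extension method defined here only for illustration:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SequenceExtensions
{
    // Hypothetical helper: applies an action to every element of a sequence.
    public static void ForEach<T>(this IEnumerable<T> source, Action<T> action)
    {
        foreach (T item in source)
            action(item);
    }
}

public static class Program
{
    public static void Main()
    {
        // Enumerable.Range(start, count) yields 9 values, 1 through 9,
        // which matches for (int i = 1; i < 10; ++i).
        Enumerable.Range(1, 9).ForEach(i => Console.WriteLine(i));
    }
}
```

The loop body becomes a value (a lambda) that is handed to the sequence, instead of the sequence being walked by hand.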

A nice introduction to delayed evaluation, an RPN calculator:

// the following computes (5*2)-1
Token[] tkns = {
    new Token(5),
    new Token(2),
    new Token(TokenType.Multiply),
    new Token(1),
    new Token(TokenType.Subtract)
};
Stack st = new Stack();

// The RPN Token Processor
tkns.Switch(
    i => (int)i.Type,
    s => st.Push(s.Operand),
    s => st.Push(st.Pop() + st.Pop()),
    s => st.Push(-st.Pop() + st.Pop()),
    s => st.Push(st.Pop() * st.Pop()),
    s => st.Push(1/st.Pop() * st.Pop())
);
Console.WriteLine(st.Pop());
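The snippet above depends on `Token`, `TokenType` and `Switch` definitions that live in the tutorial. A self-contained sketch of how those pieces might fit together follows. The `Token` class, the enum ordering and the `Switch` extension method are all assumptions made for this example, and it uses a typed `Stack<int>` with explicit left/right operands for subtraction and division.

```csharp
using System;
using System.Collections.Generic;

// Assumed ordering: the enum value doubles as the index of the handler.
public enum TokenType { Operand, Add, Subtract, Multiply, Divide }

public class Token
{
    public TokenType Type { get; }
    public int Operand { get; }
    public Token(int operand) { Type = TokenType.Operand; Operand = operand; }
    public Token(TokenType type) { Type = type; }
}

public static class Rpn
{
    // Hypothetical Switch: for each item, run the handler the selector picks.
    // The lambdas are not evaluated until this loop dispatches to them.
    public static void Switch<T>(this IEnumerable<T> source,
                                 Func<T, int> selector,
                                 params Action<T>[] handlers)
    {
        foreach (T item in source)
            handlers[selector(item)](item);
    }

    public static int Evaluate(IEnumerable<Token> tokens)
    {
        var st = new Stack<int>();
        tokens.Switch(
            t => (int)t.Type,
            t => st.Push(t.Operand),
            t => st.Push(st.Pop() + st.Pop()),
            t => { int r = st.Pop(), l = st.Pop(); st.Push(l - r); },
            t => st.Push(st.Pop() * st.Pop()),
            t => { int r = st.Pop(), l = st.Pop(); st.Push(l / r); });
        return st.Pop();
    }
}

public static class Program
{
    public static void Main()
    {
        Token[] tokens = {
            new Token(5), new Token(2), new Token(TokenType.Multiply),
            new Token(1), new Token(TokenType.Subtract)
        };
        Console.WriteLine(Rpn.Evaluate(tokens)); // (5*2)-1, prints 9
    }
}
```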

And finally a section on how to parse WordML using LINQ, extracting the text:

wordDoc.Element(w + "body").Descendants(w + "p").
    Select(p => new {
        ParagraphNode = p,
        Style = GetParagraphStyle(p),
        ParaText = p.Elements(w + "r").Elements(w + "t").
            Aggregate("", (s1, i1) => s1 = s1 + (string)i1)}).
    Foreach(p =>
        Console.WriteLine("{0} {1}", p.Style.PadRight(12), p.ParaText));
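For readers who want to try the text-extraction part on its own, here is a minimal sketch using `System.Xml.Linq`. It leaves out the paragraph style lookup (`GetParagraphStyle` is defined in the tutorial, not here), and the namespace URI and the tiny inline document are assumptions chosen for illustration, so a real file may need a different namespace.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

public static class WordText
{
    // Assumed WordprocessingML namespace; adjust to match the actual document.
    public static readonly XNamespace W =
        "http://schemas.openxmlformats.org/wordprocessingml/2006/main";

    // Returns the concatenated run text of every paragraph in the body.
    public static List<string> Paragraphs(XElement document)
    {
        return document.Element(W + "body")
            .Descendants(W + "p")
            .Select(p => string.Concat(
                p.Elements(W + "r").Elements(W + "t").Select(t => (string)t)))
            .ToList();
    }
}

public static class Program
{
    public static void Main()
    {
        // Hand-written sample input, not a real Word file.
        XElement doc = XElement.Parse(
            "<w:document xmlns:w='http://schemas.openxmlformats.org/wordprocessingml/2006/main'>" +
            "<w:body>" +
            "<w:p><w:r><w:t>Hello, </w:t></w:r><w:r><w:t>world</w:t></w:r></w:p>" +
            "<w:p><w:r><w:t>Second</w:t></w:r></w:p>" +
            "</w:body></w:document>");

        foreach (string text in WordText.Paragraphs(doc))
            Console.WriteLine(text); // prints "Hello, world" then "Second"
    }
}
```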

Very educational read.

Posted on 10 Jan 2007

SecondLife: Cory Pre-Town Hall Answers

After the release of the SecondLife client as open source software, Cory has a pre-town hall answers post.

The SecondLife client is dual licensed under the GPL and commercial licenses.

Regarding the use of Mono, Cory states:

Open Sourcing the client does not impact continued development of Mono or other planned improvements to LSL. Although Mono is also an Open Source project, that code will be running on the server, not the client. As has been previously discussed, part of the reason for going to Mono and the Common Language Runtime is to eventually allow more flexibility in scripting language choice.

Cory explains some of the rationale for open sourcing the client:

Third, security. While many of you raised questions about security, the reality is that Open Source will result in a more secure viewer and Second Life for everyone. Will exploits be found as a result of code examination? Almost certainly, but the odds are far higher that the person discovering the bug is someone working to make Second Life better. Would those exploits have been found by reverse engineering in order to crack the client? Yes, but with a far smaller chance that the exploit will be made public. Also, as part of the preparation for Open Source, we conducted a security audit and took other precautions to mitigate the security risks.

Fourth, as we continue to scale the Second Life development team --- and thank you to the many folks who have helped to get our hiring pipeline humming again --- Open Source becomes a great way for potential developers to demonstrate their skill and creativity with our code. Moreover, it makes it even easier for remote developers to become part of Linden Lab. The possibility space for Second Life is enormous, so the more development horsepower we can apply to it --- whether working for Linden Lab or not --- the faster we all can take Second Life from where it is today into the future.

And also, a new book on SecondLife is coming out.

Posted on 10 Jan 2007

Rolf's Visual Basic 8 compiler: Self Hosting on Mono

Rolf has committed to SVN his latest batch of changes that allowed his Visual Basic.NET compiler to bootstrap under Mono/Linux. This basically means that the full cycle for VB in Mono has now been completed.

Rolf's VB compiler is an implementation of Visual Basic.NET version 8. So it has support for generics and all the new stuff in the language.

Rolf wrote his compiler in VB itself, and he uses generics everywhere, so in addition to being a good self-test, it has proven to be a good test for Mono (he has already identified a few spots where the Mono JIT should be tuned).

The following are from an internal email he sent on Friday (am trying to convince him to have a blog and blog these fun facts):

Friday Results:

compile vbnc with vbc: 4.8 seconds
compile vbnc with vbnc/MS: 14.2 seconds
compile vbnc with vbnc/Mono/Linux: 15.1 seconds
compile vbnc using a bootstrapped binary: 19.0 seconds
compile vbruntime with vbc: 1.4 seconds
compile vbruntime with vbnc/MS: 3.5 seconds
compile vbruntime with vbnc/Mono/Linux: 4.2 seconds
compile vbruntime with bootstrapped binary: 4.9 seconds

The memory consumption on Friday was at 475 megs of RAM. Ben and Atsushi provided some suggestions, and the results for Monday are:

compile vbnc using a bootstrapped binary: 10.0 seconds
Memory usage: 264 MB

In managed applications, memory allocations are important because they have an impact on performance. Also note that memory usage, as reported by the default Mono profiler, counts all the memory allocated during the run. The actual working set is much smaller, since the GC reuses unused memory.

Atsushi also has a few tune-ups to the string class that remove plenty of the extra allocations that the compiler was incurring.

To bootstrap your own VBNC compiler, get the vbnc module, then go into the vbnc/bin directory, and run make bootstrap.

Bonus: in addition to bootstrapping itself, the compiler can now compile the Microsoft.VisualBasic runtime (the new version is written in VB.NET as well), and it can do a full bootstrap with the new runtime too.

Although binaries compiled with Microsoft's VB.NET compiler would run unmodified on Linux, it was not possible to use VB code in ASP.NET applications (as those get compiled on the first page hit) or if developers wanted to debug and tune up a few things on their target Linux/OSX machine.

In the next few weeks we hope to have this packaged.

Posted on 09 Jan 2007

Development Exchange: Evolution and Mono.

I will exchange an equivalent number of hours hacking your favorite Mono feature in exchange for someone implementing Google Calendar support into Evolution.

The APIs to use Google calendar service are here.

Meta-proposal: someone writes a "Software Contribution Exchange" (SCE) where people offer patches to software in exchange for patches in other applications. The NASDAQ of patches.

Posted on 09 Jan 2007

Blog Comments

I just had a stroke of genius.

My blog software is a tiny home-grown program that generates static HTML, and I never quite bothered to turn it into a server application for the sake of supporting comments.

So I was looking to host comments on a comment hosting provider like HaloScan. I signed up and registered, but was not very happy with the interface offered to posters: for anything more than a small form, you have to upgrade the basic account.

The stroke of genius was to create a Google Group for my blog and just link to it. That way I get adequate tools to manage the comments, and posters get adequate tools to track them.

Thanks, thanks; you are welcome; I know!; yes, yes; I love that show too. You can just put your donation in the hat.

The open question is whether the comment link should only appear on the blog, or it should also appear at the end of each entry on the RSS feed, for the sake of those using things like Google Reader. Discuss among yourselves.

Posted on 06 Jan 2007

Billmon Archives

Seems like my favorite blog Billmon is off the air.

Since Billmon hinted for a while that he was going to stop blogging, I made a backup of his site a few days before he shut down the site.

If you are a Billmon fan, and want to get a copy of the archive I made, you can get it here (Hosted at Coral).

Posted on 06 Jan 2007

Assorted Links

Aaron Bockover has a couple of interesting posts on the Banshee music player:

- Scripting Banshee. Aaron embedded the Boo language into Banshee to help prototype, test and extend it.

- Audio Profile Configuration for the Masses: in which he presents his work to provide a user friendly interface to configuring your underlying media platform. Screencast and screenshots included.

- Radio Support in Banshee. Check his description of the implementation, but most importantly of his use of the Rhapsody Web Services to match the radio music with cover art, artist information and more.

Aaron has shown his craftsmanship in building Banshee, which is a labor of love.

Paco posted a few screenshots of Gtk SQL#, a tool to interact with SQL databases using the System.Data API.

The Mono.Xna developers continue to make progress. Alan blogs about the progress here.

Jonathan Pobst will be joining the Managed Windows.Forms team at Novell on Monday. Jonathan has been a Windows.Forms 2.0 contributor and also developed the Mono Migration Assistant tool (Moma).

Posted on 06 Jan 2007

OpenSUSE 10.2 and Mono VMWare Image.

We have upgraded our VMWare Mono Image from SLED to OpenSUSE 10.2. In the process we fixed most of the problems that were reported by our Windows users doing their .NET migration from Windows to Linux. Please keep sending us your feedback!

I have also migrated my desktop computer at home to OpenSUSE 10.2 (my laptop remains in SLED-land for now), and here are some personal experiences.

Duncan improved my computing experience ten times by suggesting that I install the Smart package management system for OpenSUSE (Smart home page is here).

Smart is fantastic, and I like it a bit better than apt-get so far (still got apt-get installed, but I have switched to smart for my package management now).

The second piece of advice from Duncan was to configure the Additional Package Repositories, a fantastic universe of packages for OpenSUSE: pretty much anything under the sun is packaged there. I accidentally ran into "GuitarScaleAssistant" while trying out the smart search features. GuitarScaleAssistant: lovely piece of software.

Jonathan Pryor also has a blog entry on the care and feeding of your new OpenSUSE 10.2 installation, which should be very useful to Windows guys migrating to Linux.

Posted on 06 Jan 2007
