How we doubled Mono’s Float Speed

My friend Aras recently wrote the same ray tracer in various languages, including C++, C# and the upcoming Unity Burst compiler. While it is natural to expect C# to be slower than C++, what was interesting to me was that Mono was so much slower than .NET Core.

The numbers that he posted did not look good:

- C# (.NET Core): Mac 17.5 Mray/s,

- C# (Unity, Mono): Mac 4.6 Mray/s,

- C# (Unity, IL2CPP): Mac 17.1 Mray/s,

I decided to look at what was going on, and document possible areas for improvement.

As a result of this benchmark, and looking into this problem, we identified three areas of improvement:

- First, we need better defaults in Mono, as users will not tune their parameters

- Second, we need to better inform the world about the LLVM code optimizing backend in Mono

- Third, we tuned some of the parameters in Mono.

The baseline for this test, was to run Aras ray tracer on my machine, since we have different hardware, I could not use his numbers to compare. The results on my iMac at home were as follows for Mono and .NET Core:

| Runtime | Results MRay/sec |

|---|---|

.NET Core 2.1.4, debug build dotnet run |

3.6 |

.NET Core 2.1.4, release build, dotnet run -c Release |

21.7 |

Vanilla Mono, mono Maths.exe |

6.6 |

| Vanilla Mono, with LLVM and float32 | 15.5 |

During the process of researching this problem, we found a couple of problems, which once we fixed, produced the following results:

| Runtime | Results MRay/sec |

|---|---|

| Mono with LLVM and float32 | 15.5 |

| Improved Mono with LLVM, float32 and fixed inline | 29.6 |

Aggregated:

Just using LLVM and float32 your code can get almost a 2.3x performance improvement in your floating point code. And with the tuning that we added to Mono’s as a result of this exercise, you can get 4.4x over running the plain Mono - these will be the defaults in future versions of Mono.

This blog post explains our findings.

32 and 64 bit Floats

Aras is using 32-bit floats for most of his math (the float type in

C#, or System.Single in .NET terms). In Mono, decades ago, we made

the mistake of performing all 32-bit float computations as 64-bit

floats while still storing the data in 32-bit locations.

My memory at this point is not as good as it used to be and do not quite recall why we made this decision.

My best guess is that it was a decision rooted in the trends and ideas of the time.

Around this time there was a positive aura around extended precision computations for floats. For example the Intel x87 processors use 80-bit precision for their floating point computations, even when the operands are doubles, giving users better results.

Another theme around that time was that the Gnumeric spreadsheet, one of my previous projects, had implemented better statistical functions than Excel had, and this was well received in many communities that could use the more correct and higher precision results.

In the early days of Mono, most mathematical operations available

across all platforms only took doubles as inputs. C99, Posix and ISO

had all introduced 32-bit versions, but they were not generally

available across the industry in those early days (for example, sinf

is the float version of sin, fabsf of fabs and so on).

In short, the early 2000’s were a time of optimism.

Applications did pay a heavier price for the extra computation time, but Mono was mostly used for Linux desktop application, serving HTTP pages and some server processes, so floating point performance was never an issue we faced day to day. It was only noticeable in some scientific benchmarks, and those were rarely the use case for .NET usage in the 2003 era.

Nowadays, Games, 3D applications image processing, VR, AR and machine learning have made floating point operations a more common data type in modern applications. When it rains, it pours, and this is no exception. Floats are no longer your friendly data type that you sprinkle in a few places in your code, here and there. They come in an avalanche and there is no place to hide. There are so many of them, and they won’t stop coming at you.

The “float32” runtime flag

So a couple of years ago we decided to add support for performing

32-bit float operations with 32-bit operations, just like everyone

else. We call this runtime feature “float32”, and in Mono, you enable

this by passing the --O=float32 option to the runtime, and for

Xamarin applications, you change this setting on the project

preferences.

This new flag has been well received by our mobile users, as the majority of mobile devices are still not very powerful and they rather process data faster than they need the precision. Our guidance for our mobile users has been both to turn on the LLVM optimizing compiler and float32 flag at the same time.

While we have had the flag for some years, we have not made this the default, to reduce surprises for our users. But we find ourselves facing scenarios where the current 64-bit behavior is already surprises to our users, for example, see this bug report filed by a Unity user.

We are now going to change the default in Mono to be float32, you

can track the progress here: https://github.com/mono/mono/issues/6985.

In the meantime, I went back to my friend Aras project. He has been

using some new APIs that were introduced in .NET Core. While .NET

core always performed 32-bit float operations as 32-bit floats, the

System.Math API still forced some conversions from float to

double in the course of doing business. For example, if you wanted

to compute the sine function of a float, your only choice was to call

Math.Sin (double) and pay the price of the float to double

conversion.

To address this, .NET Core has introduced a new System.MathF type,

which contains single precision floating point math operations, and we

have just brought this

[System.MathF](https://github.com/mono/mono/pull/7941) to

Mono now.

While moving from 64 bit floats to 32 bit floats certainly improves the performance, as you can see in the table below:

| Runtime and Options | Mrays/second |

|---|---|

| Mono with System.Math | 6.6 |

Mono with System.Math, using -O=float32 |

8.1 |

| Mono with System.MathF | 6.5 |

Mono with System.MathF, using -O=float32 |

8.2 |

So using float32 really improves things for this test, the MathF had

a small effect.

Tuning LLVM

During the course of this research, we discovered that while Mono’s

Fast JIT compiler had support for float32, we had not added this

support to the LLVM backend. This meant that Mono with LLVM was still

performing the expensive float to double conversions.

So Zoltan added support for float32 to our LLVM code generation

engine.

Then he noticed that our inliner was using the same heuristics for the Fast JIT than it was using for LLVM. With the Fast JIT, you want to strike a balance between JIT speed and execution speed, so we limit just how much we inline to reduce the work of the JIT engine.

But when you are opt into using LLVM with Mono, you want to get the

fastest code possible, so we adjusted the setting accordingly. Today

you can change this setting via an environment variable

MONO_INLINELIMIT, but this really should be baked into the defaults.

With the tuned LLVM setting, these are the results:

| Runtime and Options | Mrays/seconds |

|---|---|

Mono with System.Math --llvm -O=float32 |

16.0 |

Mono with System.Math --llvm -O=float32 fixed heuristics |

29.1 |

Mono with System.MathF --llvm -O=float32 fixed heuristics |

29.6 |

Next Steps

The work to bring some of these improvements was relatively low. We

had some on and off discussions on Slack which lead to these

improvements. I even managed to spend a few hours one evening to

bring System.MathF to Mono.

Aras RayTracer code was an ideal subject to study, as it was self-contained, it was a real application and not a synthetic benchmark. We want to find more software like this that we can use to review the kind of bitcode that we generate and make sure that we are giving LLVM the best data that we can so LLVM can do its job.

We also are considering upgrading the LLVM that we use, and leverage any new optimizations that have been added.

SideBar

The extra precision has some nice side effects. For example, recently, while reading the pull requests for the Godot engine, I saw that they were busily discussing making floating point precision for the engine configurable at compile time (https://github.com/godotengine/godot/pull/17134).

I asked Juan why anyone would want to do this, I thought that games were just content with 32-bit floating point operations.

Juan explained to that while floats work great in general, once you “move away” from the center, say in a game, you navigate 100 kilometers out of the center of your game, the math errors start to accumulate and you end up with some interesting visual glitches. There are various mitigation strategies that can be used, and higher precision is just one possibility, one that comes with a performance cost.

Shortly after our conversation, this blog showed up on my Twitter timeline showing this problem:

http://pharr.org/matt/blog/2018/03/02/rendering-in-camera-space.html

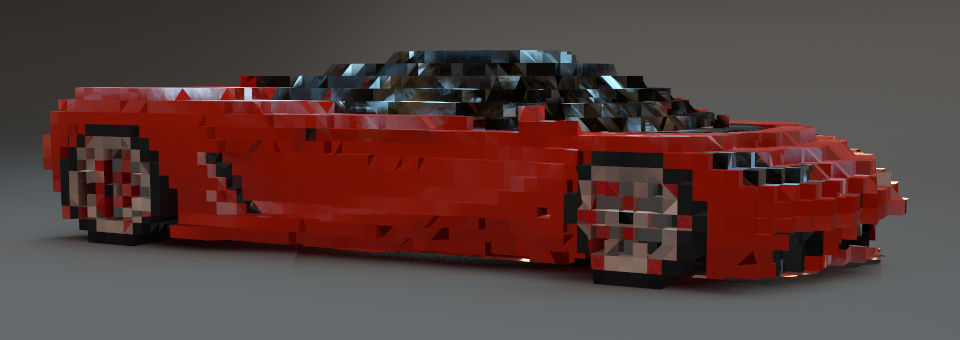

A few images show the problem. First, we have a sports car model from the pbrt-v3-scenes **distribution. Both the camera and the scene are near the origin and everything looks good.

** (Cool sports car model courtesy Yasutoshi Mori.)

Next, we’ve translated both the camera and the scene 200,000 units from the origin in xx, yy, and zz. We can see that the car model is getting fairly chunky; this is entirely due to insufficient floating-point precision.

** (Thanks again to Yasutoshi Mori.)

If we move 5×5× farther away, to 1 million units from the origin, things really fall apart; the car has become an extremely coarse voxelized approximation of itself—both cool and horrifying at the same time. (Keanu wonders: is Minecraft chunky purely because everything’s rendered really far from the origin?)

** (Apologies to Yasutoshi Mori for what has been done to his nice model.)

Posted on 11 Apr 2018

Blog Search

Archive

- 2024

Apr Jun - 2020

Mar Aug Sep - 2018

Jan Feb Apr May Dec - 2016

Jan Feb Jul Sep - 2014

Jan Apr May Jul Aug Sep Oct Nov Dec - 2012

Feb Mar Apr Aug Sep Oct Nov - 2010

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2008

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2006

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2004

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2002

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Dec

- 2022

Apr - 2019

Mar Apr - 2017

Jan Nov Dec - 2015

Jan Jul Aug Sep Oct Dec - 2013

Feb Mar Apr Jun Aug Oct - 2011

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2009

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2007

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2005

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2003

Jan Feb Mar Apr Jun Jul Aug Sep Oct Nov Dec - 2001

Apr May Jun Jul Aug Sep Oct Nov Dec