GovTrack.Us Interview with Joshua Tauberer

Jon Udell interviews Joshua Tauberer on his service GovTrack.us that helps citizens track legislation and voting in the US.

Posted on 28 Jul 2008

C# 3.0 and Parallel FX/LINQ in Mono

For a while I wanted to blog about the open source implementation of the Parallel Extensions for Mono that Jeremie Laval has been working on. Jeremie is one of our mentored students in the 2008 Google Summer of Code.

Update: Jeremie's code is available from our mono-soc-2008 repository.

Dual CPU laptops are becoming the norm; quad-core computers are now very affordable, and eight CPU machines are routinely purchased as developer workstations.

The Parallel Extension API makes it easy to prepare your software to run on multi processor machines by providing constructs that take care of distributing the work to various CPUs based on the computer load and the number of processors available.

There are various pieces in the Parallel Extensions framework, the simplest use case is Parallel.For, a loop construct that would execute the code in as optimally as possible given the number of processors available on the system.

Parallel.For is a simple replacement, you usually replace for loops that look like this:

for (int i = 0; i < N; i++)

BODY ();

With the following (this is using lambda expressions):

Parallel.For (0, N, i => BODY);

The above would iterate from 0 to N calling the code in BODY with the parameter scattered across various CPUs.

C# 3 and Parallel LINQ

Marek Safar recently announced that Mono C# compiler was now completely compliant with the 3.0 language specification.

In his announcement he

used Luke

Hoban's

brutal ray tracer-in-one-LINQ statement program. This was a

hard test case for our C# compiler to pass, but we are finally

there. I

had blogged

about it in the past. Luke Hoban's

ray-tracer-in-one-linq-statement looks like this:

var pixelsQuery = from y in Enumerable.Range(0, screenHeight) let recenterY = -(y - (screenHeight / 2.0)) / (2.0 * screenHeight) select from x in Enumerable.Range(0, screenWidth) let recenterX = (x - (screenWidth / 2.0)) / (2.0 * screenWidth) let point = Vector.Norm(Vector.Plus(scene.Camera.Forward,

Vector.Plus(Vector.Times(recenterX, scene.Camera.Right), Vector.Times(recenterY, scene.Camera.Up)))) let ray = new Ray { Start = scene.Camera.Pos, Dir = point } let computeTraceRay = (Func<Func<TraceRayArgs, Color>, Func<TraceRayArgs, Color>>) (f => traceRayArgs => (from isect in from thing in traceRayArgs.Scene.Things select thing.Intersect(traceRayArgs.Ray) where isect != null orderby isect.Dist let d = isect.Ray.Dir let pos = Vector.Plus(Vector.Times(isect.Dist, isect.Ray.Dir), isect.Ray.Start) let normal = isect.Thing.Normal(pos) let reflectDir = Vector.Minus(d, Vector.Times(2 * Vector.Dot(normal, d), normal)) let naturalColors =

from light in traceRayArgs.Scene.Lights let ldis = Vector.Minus(light.Pos, pos) let livec = Vector.Norm(ldis) let testRay = new Ray { Start = pos, Dir = livec } let testIsects = from inter in from thing in traceRayArgs.Scene.Things select thing.Intersect(testRay) where inter != null orderby inter.Dist select inter let testIsect = testIsects.FirstOrDefault() let neatIsect = testIsect == null ? 0 : testIsect.Dist let isInShadow = !((neatIsect > Vector.Mag(ldis)) || (neatIsect == 0)) where !isInShadow let illum = Vector.Dot(livec, normal) let lcolor = illum > 0 ? Color.Times(illum, light.Color) : Color.Make(0, 0, 0) let specular = Vector.Dot(livec, Vector.Norm(reflectDir)) let scolor = specular > 0 ? Color.Times(Math.Pow(specular, isect.Thing.Surface.Roughness), light.Color) : Color.Make(0, 0, 0) select Color.Plus( Color.Times(isect.Thing.Surface.Diffuse(pos), lcolor), Color.Times(isect.Thing.Surface.Specular(pos), scolor)) let reflectPos = Vector.Plus(pos, Vector.Times(.001, reflectDir)) let reflectColor =

traceRayArgs.Depth >= MaxDepth ? Color.Make(.5, .5, .5) : Color.Times(isect.Thing.Surface.Reflect(reflectPos), f(new TraceRayArgs(new Ray { Start = reflectPos, Dir = reflectDir }, traceRayArgs.Scene,

traceRayArgs.Depth + 1))) select naturalColors.Aggregate(reflectColor, (color, natColor) => Color.Plus(color, natColor)))

.DefaultIfEmpty(Color.Background).First()) let traceRay = Y(computeTraceRay) select new { X = x, Y = y, Color = traceRay(new TraceRayArgs(ray, scene, 0)) }; foreach (var row in pixelsQuery) foreach (var pixel in row) setPixel(pixel.X, pixel.Y, pixel.Color.ToDrawingColor());

And renders like this:

The above now compiles and runs as fast as it does on .NET.

Jeremie then modified the above program to use the parallel extensions to LINQ. He replaced Enumerable.Range with ParallelEnumerable.Range and foreach with the parallel ForAll method to take advantage of his library.

You can watch the above ray tracer with and without LINQ on his screencasts (LINQ ray tracer, Parallel LINQ ray tracer).

Tracking Parallel FX

There is much more information on the PFXTeam Blog. Another great blog to follow, in particular check out their Coordination Data Structures Overview, PLINQ Ordering and some demos.

Posted on 26 Jul 2008

Must follow blog

Another fantastic blog to follow: Fake Twitter Status.

Posted on 26 Jul 2008

Mono 2.0 branched, and the Linear IL

On Tuesday of last week we branched Mono for the 2.0 release; Packages are being QAed for our first release candidate and will be available next week. Bug fixing for the final release will happen on this branch.

Meanwhile, the excitement continues on trunk. Zoltan today merged the Linear IL branch.

The Linear IL code has been under development for two years and eight months and it was an effort that we started to address some of the main limitations in our current JIT design. Some of these limitations in the old design made it very hard to bring some code generation optimizations into the JIT, or made the optimizations not as effective as they could have been.

The new JIT engine will debut in Mono 2.1, later this year. Now that Linear IL is the default, the entire JIT team will focus on tuning the engine and extracting more performance out of it. But even without tuning, the new engine is already performing very well as you can see in the results comparing the engines.

Additionally, a number of creative ideas that we have to improve Mono all depended on doing this switch. We have a few surprises for developers in the next coming months.

Congratulations to Zoltan for getting this work merged.

Posted on 22 Jul 2008

C# 4.0 Design

From a recent interview on the design team for C# 4.0 Anders said about the room they meet to discuss the C# design:

We have been meeting in this room for nine years, three times a week.

This seems to be one of the reasons C# has evolved so nicely.

Sadly there are no actual details on the interview about what is coming up on C# 4.0. We have to wait until the PDC to get an idea of what will be coming.

Luckily, Mono's C# compiler is already 3.0 compliant, and we are ready to start adding 4.0 features the moment they become public.

Posted on 21 Jul 2008

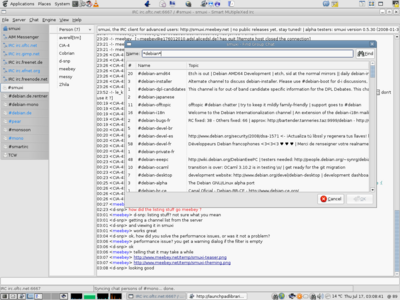

Smuxi: a new IRC client

My friend Mirco Bauer has been maintaining and coordinating the Mono packaging for Debian for many years.

Today

he released

smuxi. His own IRC client that he built on top of Gtk#:

Posted on 21 Jul 2008

Gtk+ 3.0, take 2

Emmanuele Bassi has summarized a discussion that happened on IRC after my Gtk+ 3.0 post.

His blog entry starts by saying that we should not use blogs to discuss and then goes on to discuss. I agree with the sentiment, but IRC is not a good place to do the meeting either as we do not even have IRC logs for whatever channel they were on discussing.

It is about the ISVs

Emmanuele seems to think that this is a marketing problem. It is not.

This is about the effect that the current Gtk+ 3.0 plan has on ISVs.

KDE has almost no ISVs, Qt does.

GNOME has almost no ISVs, Gtk+ does.

Most likely because anything beyond the core toolkit is too unstable in both cases, and because things are too quickly flagged as deprecated with no roadmap in place.

The Qt situation is much better, as it is commercially designed, and they have existing customers that are paying them money to solve problems for them, not introduce new ones.

Qt is also designed to be bundled with your application, and you can make your proprietary application not break if the user upgrades his Qt. This is not the modus operandi for Gtk+.

Having an "abandoned Gtk 2.x" and a "maintained, but API and ABI incompatible 3.x" which will not be available everywhere at the same time is a major turn off for ISVs.

Creating an ISV ecosystem is incredibly hard, and somehow the new generation of Gtk+ developers is now "OK" to throw away years of work of those that had to work with fewer resources than Gnome has today, fewer developers, a smaller community, slower computers, bigger challenges and yet, managed to keep Gtk+ 2.0 API compatible.

Perhaps it is not a matter of being "OK", but the new crop of Gtk+ developers does just not appreciate just how much value ISVs are for Gtk+, Gnome and the Linux desktop in the first place. They did not have to fight to get those guys on board on the first place.

The premises and the conclusions of Imendio's paper would not hold if you were to consider application developers in the mix. But in particular, it seems that the mindset is dangerously close to the rationalization used recently by a KDE spokesperson and lampooned by the Linux Hater Blog.

What bothered me last night

What bothered me last night after I blogged was the realization that most of the Imendio developers have switched to OSX as their main desktop operating system (At least rhult, hallski and kris).

These are great developers, but for their day-to-day activities, they have given up on the Linux/Gnome desktop. Their concern is no longer to attract ISVs, as long as the source compiles with some changes, they will be OK.

There are certainly some developers at Imendio that still use Linux, and I am sure they have a "Linux partition" to test things out. But when it comes to ensuring the viability of the Linux desktop ecosystem, I do not feel comfortable about wiping out the ISV ecosystem that we have.

Discussion

Emmanuele says:

for instance, I would have loved to have Miguel at the gtk+ team meeting of Tuesday at GUADEC: it would have been a great discussion, I’m sure of it, and we might have had a different state of the union talk.

I mentioned this problem in my previous blog entry. Even if I had made it to Istanbul on Tuesday, I am merely one of the voices concerned about API stability. "Tuesday Meeting at Guadec" is hardly inclusive:

There was no Adobe.

There was no VMware.

There was no Medsphere.

There were no Eclipse folks (who have complained previously about the ABI/API issues).

There was no Gnumeric.

And these are the ones I can think of the top of my head.

Senior voices from our own community were missing, like Morten Welinder who has expressed his opinion in a shorter post:

The best thing about tabs that I can think of is that it will keep certain people from doing more harmful things like changing the gtk+ api for no good reason.

I do not know who attended the Gtk+ planning on Tuesday, but it was not inclusive, and I suspect it was heavily tilted towards the Nokia-ecosystem.

From a Nokia standpoint, I understand the desire of dropping older code, get a smaller version of Gtk+ out there, and be able to get a very flashy system at all costs. The iPhone and OSX are strong UIs, and I can understand the desire to compete, but lets not throw the baby with the bathwater.

Decisions about the future of Gtk+ can not be done without all the stakeholders, and specially without those that have worked for years in keeping the API stability under duress and have built applications on top of it.

Features

Emmanuele says:

Yes, 3.0.0 might not have features. is this bad marketing? probably. so we need to fix this. a way1 to do this would be keeping the 3.0.0 in alpha state, call it 2.99.02 and add features to that until we get to a 3.0.0 that developers will want to migrate to, like the new scenegraph API or the new style API. let’s break with 2.x in style

As I said previously, I would endorse such a plan if it is shown that fundamental new features could not be implemented in an API/ABI compatible way. Nobody has yet refuted my assessment of the various areas that would not break compatibility, and that covers most of the new features.

Although I am not the only stake holder, nor the only ISV, nor the only developer.

Communication

Emmanuele says:

communication: there’s a certain lack of communication between the gtk+ team and the users of the library. in my opinion, it’s due to the small number of active developers and to the fact that ISVs don’t really get involved into shaping the platform they are using. they have the source code, and sometimes it’s easier to fix in-house than to communicate and go through the proper process — and this is a structural problem that is caused by the small number of people involved in the said process as well. the gtk+ team needs to open up more, and at the same time the ISVs need to get more involved. sometimes it feels to me that the team is waiting for features, direction and help in the development, while the users of the library are waiting for the team to come up with the perfect plan to fix all the bugs and warts while retaining the whole API and ABI.

I agree with Emmanuele.

We setup the GNOME Foundation for things like this; Lets use the GNOME Foundation organizational powers to reach out to ISVs; to organize a platform and Gtk+ summit as it is now clearly needed; Lets include all the stakeholders, not only the active developers.

Process

Emmanuele says:

process: this is connected to the first point - we have a lot of channels, and it might be daunting to actually follow them all; but we're also open in terms of discussion and revision. this is our strength. so please: if you want to discuss, join the IRC meetings on the #gtk-devel channel on Tuesday at 20:00 UTC or send an email to gtk-devel-list with your points. get involved. help shaping the future. don’t stand idly by, and wait for stuff to break to complain.

Casual discussion on IRC is OK, but that should not be the repository for decision making for such a fundamental component of GNOME and the Linux desktop.

Perhaps the discussion can start on IRC, but minutes, summaries and decisions should be posted to the Gtk+ developers and users mailing list and given enough time for all the stake holders to participate.

Additionally, you can not expect that your blog has now reached all the ISVs, not even the gtk-devel-list (which is presumably a mailing list for the developers of Gtk+ not for its users).

We need to have a mailing list discussion, and then we need to have an outreach program to get to all stakeholders, including the ISVs to formulate a plan.

Posted on 15 Jul 2008

Gtk+ 3.0

The Gtk+ 3.0 proposal being discussed currently sounds like a disaster for GNOME. The reasoning was first articulated in the histrionic Imendio Gtk+ 3.0 Vision presentation done at the Gtk+ Hackfest in Berlin. This is a meeting where application developers were underrepresented, and somehow we have accepted those proposals as the community consensus.

The proposal goes like this: Gtk+ 3.0 will hide all public fields in objects, provide accessors to these, remove APIs that have been flagged as deprecated and introduce no new features.

All the actual new features that some people want would come up in future versions. Which features will come is yet to be decided, because nobody knows when and what will be implemented.

There are lots of technical problems with the proposal, and from my discussions this week at GUADEC it does not seem that the Gtk+ developers have discussed the implications with the users of Gtk+.

There is a major strategic problem with the plan as well. The most important one is that there is no actual plan for which features will be added, and when these features will be added. There are no prototype implementations, and the idea of first developing the new features in a branch to actually study the code, the implementation and its potential APi breakage is not even on the agenda.

Basically, we are being told that we should trust that first breaking the API and hiding fields will suddenly enable a whole generation of new features to be implemented.

But it gets better. There are no guarantees that 3.x will not break the API if the feature in question turns out to require API breakage. Which means that we might actually end up in a situation where there will be multiple API breakages.

This by all means sounds like a bad plan.

Towards a Better Plan for Gtk+ 3.0

I am not against breaking the API for newer versions of Gtk+ if the benefits outweigh the downsides, and the execution presented is not bad. But "3.0 will help us clean up" is not a good enough reason.

We need:

- A Clear Roadmap: We need a clear roadmap of

which features will be implemented and when they will

be implemented. This is the first step to making

decisions.

A clear roadmap would also allow new developers to join the efforts and big companies like Red Hat and Novell to dedicate some full time resources to make some of these feature happen. As things stand today, we are basically being told to "wait-until-3.0-then-we-will-sort-it-out".

- Working Code: Before a feature for a future

version of Gtk+ can be considered a reason to break

the API, the technology must exist in the form of a

patch/branch.

This is not new, and should be of no surprise to anyone. This is how the Cairo integration into Gtk+ was done, how the layout changes are being implemented and how every other software product is ran. - New API-breaking features must be publicly

discussed with the community: Since this will

affect every application developer (open source and

proprietary) we should get the feedbacks of these

communities.

We could all get as excited as we want about a "gboolean bouncing_text" field in a GtkLabel, but that does not mean that we should break the API for the sake of it.

Application developers were underrepresented at the Berlin hackfest, and even GUADEC is a microcosm of the ISVs that we had to reach. For instance, I did not see anyone from Adobe, VMware or Medsphere at the conference. - Transitional 2.x Releases: For a long-enough period to get distributions to include the code, Gtk+ 2.x should include getter/setter macros to allow software developers to upgrade their source code to work with and without the public fields. To soft-land the switch to a hiding-field Gtk release (this is covered in the Imendio proposal).

This is by no means a comprehensive plan, it is only the beginning of a plan.

Lets please avoid inflicting in GNOME a KDE 4.0 (yes, I know its not the exact same scenario; and yes, I know those clock applets are cute).

Gtk+ Extensions

From looking at the original Imendio proposal. it seems that plenty of the things they want can be implemented without breaking the API:

- Animation: doable entirely without breaking the API. The animation/storyboard framework is an entirely new codebase, that would not touch anything in Gtk+. Some widgets might require smoother rendering on changes, but all can be done in an API-compatible framework. public API.

- Physics: same, add-on.

- Data-binding: same, add-on.

- Easier language bindings: same, add-on.

- Improved back-ends, more OS integration: Can not think of a major issue, but granted, I could be missing something, and Imendio has done the OSX port.

And my own favorite: killing all Gtk+ theme engines, and replacing it with a Qt-like CSS theme engine. This is not really an API break, as the only consumers of this code are the theme engines, and those we can safely kill and replace with CSS themes, no application code would break.

Maybe Havoc's proposal requires an API breaking change. And maybe this is worth breaking the API for. But breaking it for no gain, and no prototype to even understand the actual effects post 3.0 is wrong.

Update: For what its worth, I would lean towards breaking compatibility in 3.0 if it meant 3.0 would include the Havoc-like Scene system. That would make it worthier of a change.

Update: As usual, the Linux Hater Blog has some great commentary. Some of his feedback on KDE 4.0 applies to our own decision making. Worth a read.

Posted on 14 Jul 2008

RIA BOF at GUADEC

Thanks to Behdad and the organizers at GUADEC, I will be having a BOF/discussion session tomorrow at 4:30pm to discuss a new class of applications built on Silverlight or Flash and how they relate to the future of the Linux Desktop.

Some of the ideas are clearly derived from Alex and Chris thinking about the desktop; it is heavily influenced by our work on Moonlight; by the recent strides that Adobe has made on creating great looking applications on the web (Buzzword and Photoshop Express) and the future of Gnome.

Join me tomorrow for a discussion on how to launch an effort to create an open-source, RIA-based desktop applications.

I am very excited.

Posted on 08 Jul 2008

Guadec/Istanbul; Rich Desktop Applications.

Next week I will be attending the GNOME Developer Conference in Istanbul.

Looking forward to meet old friends and looking forward to discuss with people the future of rich applications.

BOF: Does anyone know how to apply for a last-minute BOF?

If there is some free presentation slot, I would like to hold an informal BOF to discuss these ideas.

Posted on 04 Jul 2008

« Newer entries | Older entries »

Blog Search

Archive

- 2024

Apr Jun - 2020

Mar Aug Sep - 2018

Jan Feb Apr May Dec - 2016

Jan Feb Jul Sep - 2014

Jan Apr May Jul Aug Sep Oct Nov Dec - 2012

Feb Mar Apr Aug Sep Oct Nov - 2010

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2008

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2006

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2004

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2002

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Dec

- 2022

Apr - 2019

Mar Apr - 2017

Jan Nov Dec - 2015

Jan Jul Aug Sep Oct Dec - 2013

Feb Mar Apr Jun Aug Oct - 2011

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2009

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2007

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2005

Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec - 2003

Jan Feb Mar Apr Jun Jul Aug Sep Oct Nov Dec - 2001

Apr May Jun Jul Aug Sep Oct Nov Dec