.NET Foundation Changes

Today we announced a major change to the .NET Foundation, in which we fundamentally changed the way that the foundation operates. The new foundation draws inspiration from the Gnome Foundation and the F# Foundation.

We are making the following changes:

The Board of Directors of the Foundation will now be elected by the .NET Foundation membership, and they will be in charge of steering the direction of the foundation. The Board of Directors will be elected annually via direct vote from the members of the Foundation, with just one permanent member from Microsoft.

Anyone contributing to projects in the .NET Foundation can become a voting member of the Foundation. The main benefit is that you get to vote for who should represent you on the board of directors. To admit a member, we will judge their contributions to the projects in the foundation, which can be code contributions, documentation, evangelism or other activities that advance .NET and its ecosystem.

Membership fee: we are adding a membership fee that will give the .NET Foundation independence from Microsoft when it comes to how it chooses to promote .NET and the ecosystem around it. We realize that not everyone can pay this fee, so this fee can be waived. But those that contribute to the Foundation will help us fund activities that will expand .NET.

We intend to have elections every year, so individuals will campaign on what they intend to bring to the board.

There is a limit on the number of board members representing a single company, which prevents the board from being stacked with contributors from a single company, and will encourage our community to vote for board members with diverse backgrounds, strengthening the views of the board.

Companies do not vote. Only contributors to the .NET ecosystem can vote; they may be affiliated with a company, but the companies themselves have no vote. Our corporate sponsors are sponsors that care as much as we do about the growth and health of our ecosystem.

These changes are very close to my heart, and it took a lot of work to make them happen and to make sure that Microsoft the company was comfortable with giving up control over the .NET Foundation.

I want to thank Jon Galloway, the Executive Director of the current .NET Foundation, for helping make this a reality.

Going from the idea to the execution took a long time. Martin Woodward did some of the early foot work to get various people at Microsoft comfortable with the idea. Then Jon took over, and had to continue this process to get everyone on board and get everyone to accept that our little baby was ready to graduate, go to college and start its own independent life.

I want to thank my peers on the board of directors who supported this move, Scott Hunter, Oren Novotny and Rachel Reese, as well as the entire supporting crew that helped us make this happen: Beth Massi, Jay Schmelzer and the various heroes in the Microsoft legal department that crossed all the t’s and dotted all the i’s.

See you on the campaign trail!

Posted on 04 Dec 2018

Startup Improvements in Xamarin.Forms on Android

With Xamarin.Forms 3.0, in addition to the many new features that we worked on, we have been doing some general optimizations across the board, from compile times to startup times, and wanted to share some recent results on the net effect on one of our larger sample apps.

These are the results when doing a cold start for the SmartHotel360 application on Android when compiled for 32 bits (armeabi-v7a) on a Google Pixel (1st gen).

| | Release | Release/AOT | Release/AOT+LLVM |
|---|---|---|---|
| Forms 2.5.0 | 6.59s | 1.66s | 1.61s |
| Forms 3.0.0 | 3.52s | 1.41s | 1.38s |

This is independent of the work that we are doing to improve Android's startup speed, which both brings additional benefits today and will bring more in the future.

One of the areas that we are investing in for Android is removing any dynamic code execution at startup to integrate with the Java runtime. Instead, all of this is being statically computed, similar to what we do on Mac and iOS, where we completely eliminated reflection and code generation from startup.

Posted on 18 May 2018

How we doubled Mono’s Float Speed

My friend Aras recently wrote the same ray tracer in various languages, including C++, C# and the upcoming Unity Burst compiler. While it is natural to expect C# to be slower than C++, what was interesting to me was that Mono was so much slower than .NET Core.

The numbers that he posted did not look good:

- C# (.NET Core): Mac 17.5 Mray/s,

- C# (Unity, Mono): Mac 4.6 Mray/s,

- C# (Unity, IL2CPP): Mac 17.1 Mray/s,

I decided to look at what was going on, and document possible areas for improvement.

As a result of this benchmark, and looking into this problem, we identified three areas of improvement:

- First, we need better defaults in Mono, as users will not tune their parameters

- Second, we need to better inform the world about the LLVM code optimizing backend in Mono

- Third, we tuned some of the parameters in Mono.

The baseline for this test was to run Aras's ray tracer on my machine; since we have different hardware, I could not use his numbers to compare. The results on my iMac at home were as follows for Mono and .NET Core:

| Runtime | Results MRay/sec |
|---|---|
| .NET Core 2.1.4, debug build, dotnet run | 3.6 |
| .NET Core 2.1.4, release build, dotnet run -c Release | 21.7 |
| Vanilla Mono, mono Maths.exe | 6.6 |
| Vanilla Mono, with LLVM and float32 | 15.5 |

During the process of researching this problem, we found a couple of problems, which once we fixed, produced the following results:

| Runtime | Results MRay/sec |
|---|---|
| Mono with LLVM and float32 | 15.5 |
| Improved Mono with LLVM, float32 and fixed inline | 29.6 |

Aggregated:

Just by using LLVM and float32, you can get roughly a 2.3x performance improvement in your floating point code. And with the tuning that we added to Mono as a result of this exercise, you can get 4.4x over running plain Mono. These will be the defaults in future versions of Mono.
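As a rough check on those ratios, the speedups can be recomputed from the throughput numbers in the tables above. This is only a sketch: the `speedup` helper and the constant names are hypothetical, made up for this illustration.

```python
# Throughput in Mray/s, copied from the result tables above.
PLAIN_MONO = 6.6
MONO_LLVM_FLOAT32 = 15.5
MONO_LLVM_FLOAT32_TUNED = 29.6


def speedup(baseline: float, improved: float) -> float:
    """Ratio of the improved throughput to the baseline throughput."""
    return improved / baseline


print(f"LLVM + float32:        {speedup(PLAIN_MONO, MONO_LLVM_FLOAT32):.2f}x")
print(f"LLVM + float32, tuned: {speedup(PLAIN_MONO, MONO_LLVM_FLOAT32_TUNED):.2f}x")
```

Both ratios are measured against plain Mono on the same machine, so they say nothing about how Mono compares to .NET Core.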

This blog post explains our findings.

32 and 64 bit Floats

Aras is using 32-bit floats for most of his math (the float type in C#, or System.Single in .NET terms). In Mono, decades ago, we made the mistake of performing all 32-bit float computations as 64-bit floats while still storing the data in 32-bit locations.
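To see what that choice means in practice, here is a minimal sketch in Python (not Mono's actual code) that mimics both behaviors by rounding values through a 32-bit float with the standard `struct` module. The helper name `to_float32` and the sample values are assumptions picked for illustration.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


a = to_float32(1.0e8)  # exactly representable as a 32-bit float
b = to_float32(1.0)

# Pure 32-bit arithmetic: every intermediate result is rounded to 32 bits.
narrow = to_float32(to_float32(a + b) - a)

# Compute in 64 bits and round only when storing into the 32-bit location.
wide = to_float32((a + b) - a)

print(narrow)  # 0.0, the +1 is lost because floats near 1e8 are 8 apart
print(wide)    # 1.0, the 64-bit intermediate kept it
```

The wide version gives the nicer answer here, but on real hardware it pays for a float to double conversion on the way in and a double to float conversion on the way out.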

My memory at this point is not as good as it used to be, and I do not quite recall why we made this decision.

My best guess is that it was a decision rooted in the trends and ideas of the time.

Around this time there was a positive aura around extended precision computations for floats. For example the Intel x87 processors use 80-bit precision for their floating point computations, even when the operands are doubles, giving users better results.

Another theme around that time was that the Gnumeric spreadsheet, one of my previous projects, had implemented better statistical functions than Excel had, and this was well received in many communities that could use the more correct and higher precision results.

In the early days of Mono, most mathematical operations available across all platforms only took doubles as inputs. C99, POSIX and ISO had all introduced 32-bit versions, but they were not generally available across the industry in those early days (for example, sinf is the float version of sin, fabsf of fabs and so on).

In short, the early 2000’s were a time of optimism.

Applications did pay a heavier price for the extra computation time, but Mono was mostly used for Linux desktop applications, serving HTTP pages and some server processes, so floating point performance was never an issue we faced day to day. It was only noticeable in some scientific benchmarks, and those were rarely the use case for .NET usage in the 2003 era.

Nowadays, games, 3D applications, image processing, VR, AR and machine learning have made floats a more common data type in modern applications. When it rains, it pours, and this is no exception. Floats are no longer your friendly data type that you sprinkle in a few places in your code, here and there. They come in an avalanche and there is no place to hide. There are so many of them, and they won’t stop coming at you.

The “float32” runtime flag

So a couple of years ago we decided to add support for performing 32-bit float operations with 32-bit operations, just like everyone else. We call this runtime feature “float32”, and in Mono, you enable this by passing the -O=float32 option to the runtime, and for Xamarin applications, you change this setting in the project preferences.

This new flag has been well received by our mobile users, as the majority of mobile devices are still not very powerful and they would rather process data faster than have the extra precision. Our guidance for our mobile users has been to turn on both the LLVM optimizing compiler and the float32 flag at the same time.

While we have had the flag for some years, we have not made this the default, to reduce surprises for our users. But we find ourselves facing scenarios where the current 64-bit behavior already surprises our users, for example, see this bug report filed by a Unity user.

We are now going to change the default in Mono to be float32; you can track the progress here: https://github.com/mono/mono/issues/6985.

In the meantime, I went back to my friend Aras's project. He has been using some new APIs that were introduced in .NET Core. While .NET Core always performed 32-bit float operations as 32-bit floats, the System.Math API still forced some conversions from float to double in the course of doing business. For example, if you wanted to compute the sine function of a float, your only choice was to call Math.Sin (double) and pay the price of the float to double conversion.

To address this, .NET Core has introduced a new System.MathF type, which contains single precision floating point math operations, and we have just brought [System.MathF](https://github.com/mono/mono/pull/7941) to Mono.

Moving from 64-bit floats to 32-bit floats certainly improves performance, as you can see in the table below:

| Runtime and Options | Mrays/second |
|---|---|
| Mono with System.Math | 6.6 |
| Mono with System.Math, using -O=float32 | 8.1 |
| Mono with System.MathF | 6.5 |
| Mono with System.MathF, using -O=float32 | 8.2 |

So using float32 really improves things for this test, while MathF had only a small effect.

Tuning LLVM

During the course of this research, we discovered that while Mono’s Fast JIT compiler had support for float32, we had not added this support to the LLVM backend. This meant that Mono with LLVM was still performing the expensive float to double conversions.

So Zoltan added support for float32 to our LLVM code generation engine.

Then he noticed that our inliner was using the same heuristics for LLVM as it was using for the Fast JIT. With the Fast JIT, you want to strike a balance between JIT speed and execution speed, so we limit just how much we inline to reduce the work of the JIT engine.

But when you opt into using LLVM with Mono, you want to get the fastest code possible, so we adjusted the setting accordingly. Today you can change this setting via the MONO_INLINELIMIT environment variable, but this really should be baked into the defaults.

With the tuned LLVM setting, these are the results:

| Runtime and Options | Mrays/second |
|---|---|
| Mono with System.Math --llvm -O=float32 | 16.0 |
| Mono with System.Math --llvm -O=float32 fixed heuristics | 29.1 |
| Mono with System.MathF --llvm -O=float32 fixed heuristics | 29.6 |

Next Steps

The effort required to bring some of these improvements was relatively low. We had some on and off discussions on Slack which led to these improvements. I even managed to spend a few hours one evening to bring System.MathF to Mono.

Aras's ray tracer code was an ideal subject to study: it was self-contained, and it was a real application and not a synthetic benchmark. We want to find more software like this that we can use to review the kind of bitcode that we generate and make sure that we are giving LLVM the best data that we can so LLVM can do its job.

We are also considering upgrading the LLVM that we use, to leverage any new optimizations that have been added.

SideBar

The extra precision has some nice side effects. For example, recently, while reading the pull requests for the Godot engine, I saw that they were busily discussing making floating point precision for the engine configurable at compile time (https://github.com/godotengine/godot/pull/17134).

I asked Juan why anyone would want to do this; I thought that games were content with 32-bit floating point operations.

Juan explained to me that while floats work great in general, once you “move away” from the center, say in a game, you navigate 100 kilometers out of the center of your game, the math errors start to accumulate and you end up with some interesting visual glitches. There are various mitigation strategies that can be used, and higher precision is just one possibility, one that comes with a performance cost.

Shortly after our conversation, this blog showed up on my Twitter timeline showing this problem:

http://pharr.org/matt/blog/2018/03/02/rendering-in-camera-space.html

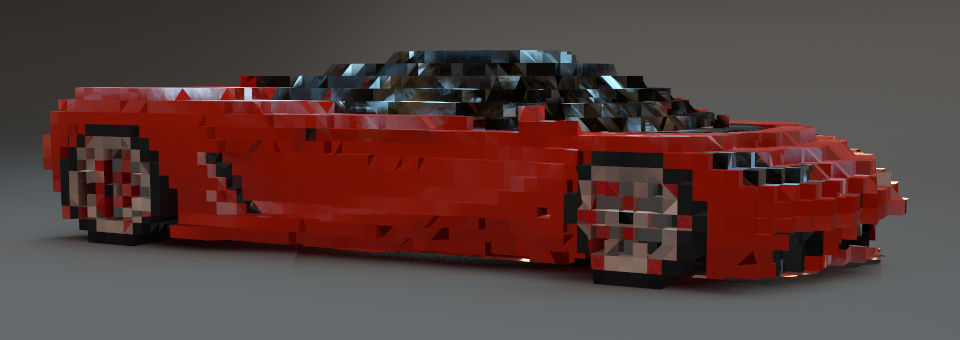

A few images show the problem. First, we have a sports car model from the pbrt-v3-scenes distribution. Both the camera and the scene are near the origin and everything looks good.

(Cool sports car model courtesy Yasutoshi Mori.)

Next, we’ve translated both the camera and the scene 200,000 units from the origin in x, y, and z. We can see that the car model is getting fairly chunky; this is entirely due to insufficient floating-point precision.

(Thanks again to Yasutoshi Mori.)

If we move 5× farther away, to 1 million units from the origin, things really fall apart; the car has become an extremely coarse voxelized approximation of itself, both cool and horrifying at the same time. (Keanu wonders: is Minecraft chunky purely because everything’s rendered really far from the origin?)

(Apologies to Yasutoshi Mori for what has been done to his nice model.)
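The size of this effect can be estimated by looking at the gap between adjacent 32-bit floats at each distance from the origin. This is a small sketch rather than anything taken from pbrt or Godot; `float32_spacing` is a hypothetical helper name, and it only handles positive finite values.

```python
import struct


def float32_spacing(x: float) -> float:
    """Gap between x (rounded to 32-bit float) and the next larger 32-bit float."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    here = struct.unpack("<f", struct.pack("<I", bits))[0]
    above = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return above - here


for distance in (1.0, 200_000.0, 1_000_000.0):
    print(distance, float32_spacing(distance))
# 1.0        -> 1.1920928955078125e-07
# 200000.0   -> 0.015625
# 1000000.0  -> 0.0625
```

At one million units, coordinates can only land on a grid 0.0625 units wide, which is consistent with the blocky look of the last render.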

Posted on 11 Apr 2018

Fixing Screenshots in MacOS

This was driving me insane. For years, I have been using Command-Shift-4 to take screenshots on my Mac. When you hit that keypress, you get to select a region of the screen, and the result gets placed in your ~/Desktop directory.

Recently, the feature stopped working.

I first blamed Dropbox settings, but that was not it.

I read every article on the internet on how to change the default location and restart the SystemUIServer.

The screencapture command line tool worked, but not the hotkey.

Many reboots later, I disabled System Integrity Protection so I could use iosnoop and dtruss to figure out why screencapture was not logging. I was looking at the logs right there, and saw where things were different, but could not figure out what was wrong.

Then another one of my Macs got infected. So now I had two Mac laptops that could not take screenshots.

And then I realized what was going on.

When you trigger Command-Shift-4, the TouchBar lights up and lets you customize how you take the screenshot, like this:

And if you scroll it, you get these other options:

And I had recently used these settings.

If you change your default here, it will be preserved, so even if the shortcut is Command-Shift-4 for take-screenshot-and-save-in-file, if you use the TouchBar to make a change, this will override any future uses of the command.

Posted on 04 Apr 2018

Magic!

Mono has a pure C# implementation of the Windows.Forms stack which works on Mac, Linux and Windows. It emulates some of the core of the Win32 API to achieve this.

While Mono's Windows.Forms is not an actively developed UI stack, it is required by a number of third party libraries, and some of its data types are consumed by other Mono libraries (part of the original design contract), so we have kept it around.

On Mac, Mono's Windows.Forms was built on top of Carbon, an old C-based API that was available on MacOS. This backend was written by Geoff Norton for Mono many years ago.

As Mono switched to 64 bits by default, Windows.Forms could no longer be used. We had a couple of options: try to upgrade the 32-bit Carbon code to 64-bit Carbon code, or build a new backend on top of Cocoa (using Xamarin.Mac).

For years, I had assumed that Carbon on 64 was not really supported, but a recent trip to the console shows that Apple has added a 64-bit port. Either my memory is failing me (common at this age), or Apple at some point changed their mind. I spent all of 20 minutes trying to do an upgrade, but the header files and documentation for the APIs we rely on are just not available, so at best, I would be doing some guess work as to which APIs have been upgraded to 64 bits, and which APIs are available (rumors on Google searches indicate that while Carbon is 64 bits, not all APIs might be there).

I figured that I could try to build a Cocoa backend with Xamarin.Mac, so I sent this pull request to let me do this outside of the Mono tree in my copious spare time, and this weekend I did some work on the Cocoa Driver.

But this morning, on Twitter, Filip Navara noticed the above, and contacted me:

@migueldeicaza We actually have a working Cocoa backend for Mono SWF (along with lot of fixes that we never ported upstream). It didn't make sense for us to release a big dump, but could be useful for your new effort...

— Filip Navara (@filipnavara) February 20, 2018

He has been kind enough to upload this Cocoa-based backend to GitHub.

Going Native

There are a couple of interesting things about this Windows.Forms backend for Cocoa.

The first one is that it is using sysdrawing-coregraphics, a custom version of System.Drawing that we had originally developed for iOS users that implements the API in terms of CoreGraphics instead of using Cairo, FontConfig, FreeType and Pango.

The second one is that some controls are backed by native AppKit controls, namely those that implement the IMacNativeControl interface. Among those you can find Button, ComboBox, ProgressBar, ScrollBar and the UpDownStepper.

I will now abandon my weekend hack, and instead get this code drop integrated as the 64-bit Cocoa backend.

Stay tuned!

Posted on 20 Feb 2018

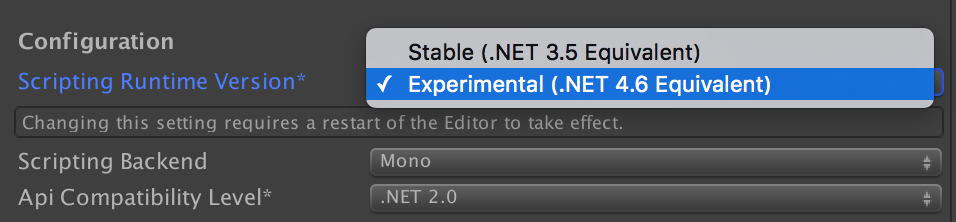

Why I am excited about Unity in 2018

Recently Aras shared his excitement for Unity in 2018. There is a ton in that blog post to unpack.

What I am personally excited about is that Unity now ships an up-to-date Mono in the core.

Jonathan Chambers and his team of amazing low-level VM hackers have been hard at work upgrading Unity's VM and libraries to bring you the latest and greatest Mono runtime to Unity. We have had the privilege of assisting in this migration and providing them with technical support.

The work that the Unity team has done lays down the foundation for an ongoing update to their .NET capabilities, so future innovation on the platform can be quickly adopted, bringing new and more joyful capabilities to developers in the platform.

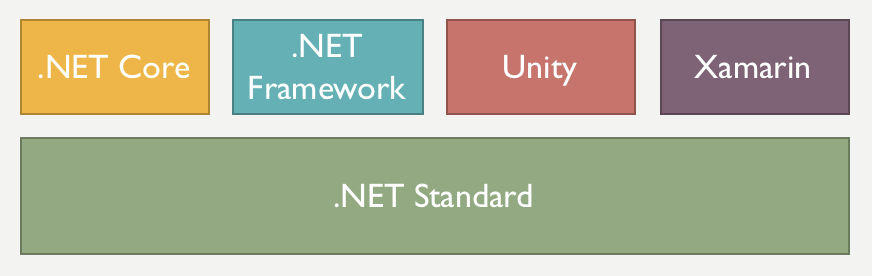

With this new runtime, Unity developers will be able to access and consume a large portion of third party .NET libraries, including all those shiny .NET Standard Libraries - the new universal way of sharing code in the .NET world.

C# 7

The Unity team has also provided very valuable guidance to the C# team, which has directly influenced features in C# 7 like ref locals and returns. In our own tests using C# for an AR application, we doubled the speed of managed-code AR processing by using these new features.

When users use the new Mono support in Unity, they default to C# 6, as this is the version that Mono's C# compiler fully supports. One of the challenges is that Mono's C# compiler has not fully implemented support for C# 7, as Mono itself moved to Roslyn.

The team at Unity is now working with the Roslyn team to adopt the Roslyn C# compiler in Unity. Because Roslyn is a larger compiler, it is slower to start up, and Unity does many small incremental compilations. So the team is working towards adopting the server compilation mode of Roslyn. This runs the Roslyn C# compiler as a reusable service which can compile code very quickly, without having to pay the price for startup every time.

Visual Studio

If you install the Unity beta today, you will also see that on Mac, it now uses Visual Studio for Mac as its default editor.

JB Evain leads our Unity support for Visual Studio and he has brought the magic of his Unity plugin to Visual Studio for Mac.

As Unity upgrades its Mono runtime, they also benefit from the extended debugger protocol support in Mono, which brings years of improvements to the debugging experience.

Posted on 20 Feb 2018

Interactive Line Editing in .NET

Even these days, I still spend too much time on the command line. My friends still make fun of my MacOS desktop when they see that I run a full screen terminal, and the main program that I am running there is the Midnight Commander:

Every once in a while I write an interactive application, and I want to have full bash-like command line editing, history and search. The Unix world used to have GNU readline as a C library, but I wanted something that worked on both Unix and Windows with minimal dependencies.

Almost 10 years ago I wrote myself a C# library to do this. It works on both Unix and Windows, and it has been the library used by Mono's interactive C# shell for the last decade or so.

This library used to be called

The idea of distributing libraries that were made up of a single source file did not really catch on. So we have modernized our own ways and now we publish these single-file libraries as NuGet packages that you can use.

You can now add an interactive command line shell with NuGet by installing the Mono.Terminal NuGet package into your application.

We also moved the single library from being part of the gigantic Mono repository into its own repository.

The GitHub page has more information on the key bindings available, how to use the history and how to add code-completion (even including a cute popup).

The library is built entirely on top of System.Console, and is distributed as a .NET Standard library which can run on your choice of .NET runtime (.NET Core, .NET Desktop or Mono).

Check the GitHub project page for more information.

Posted on 12 Jan 2018

Default ColorSpaces

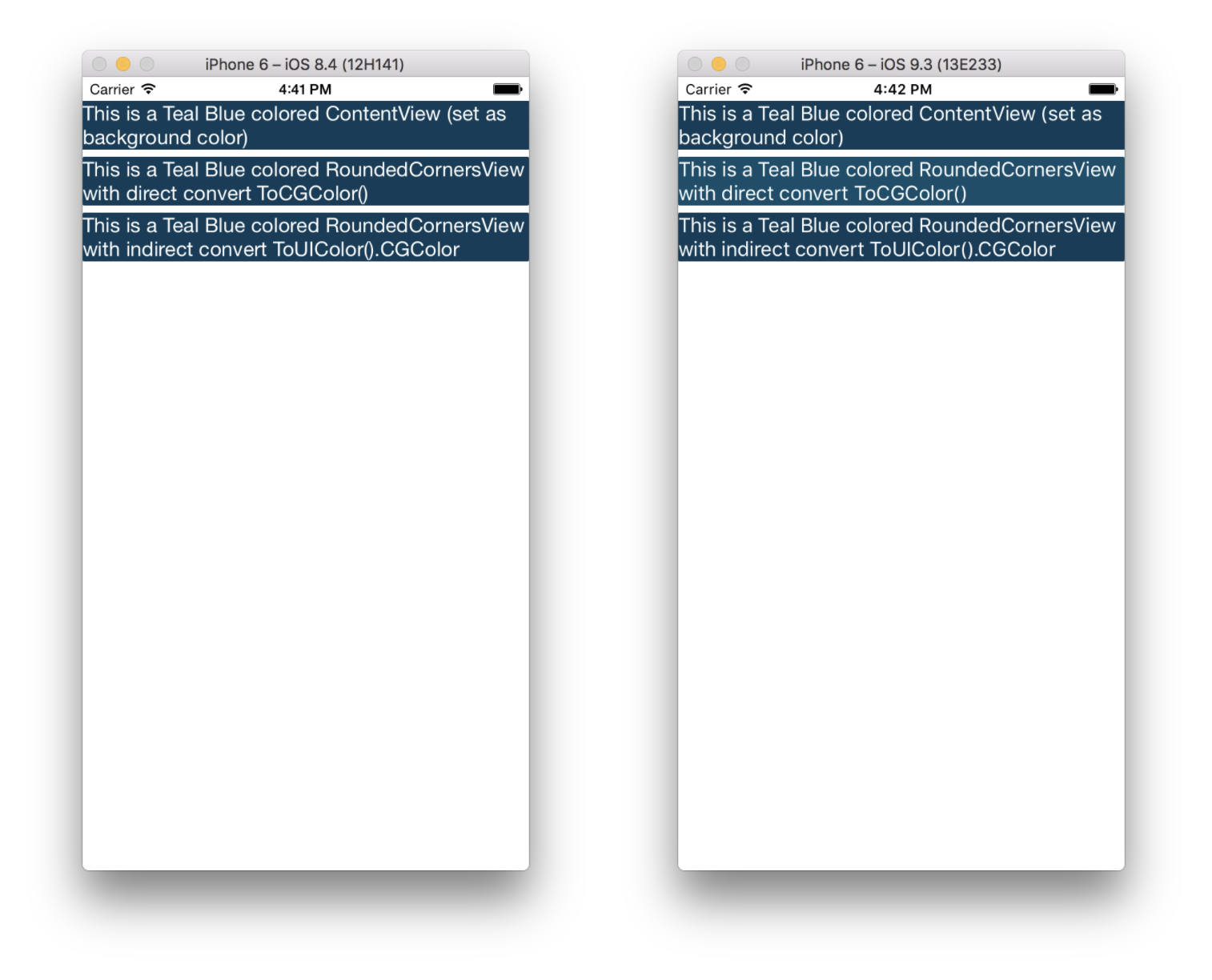

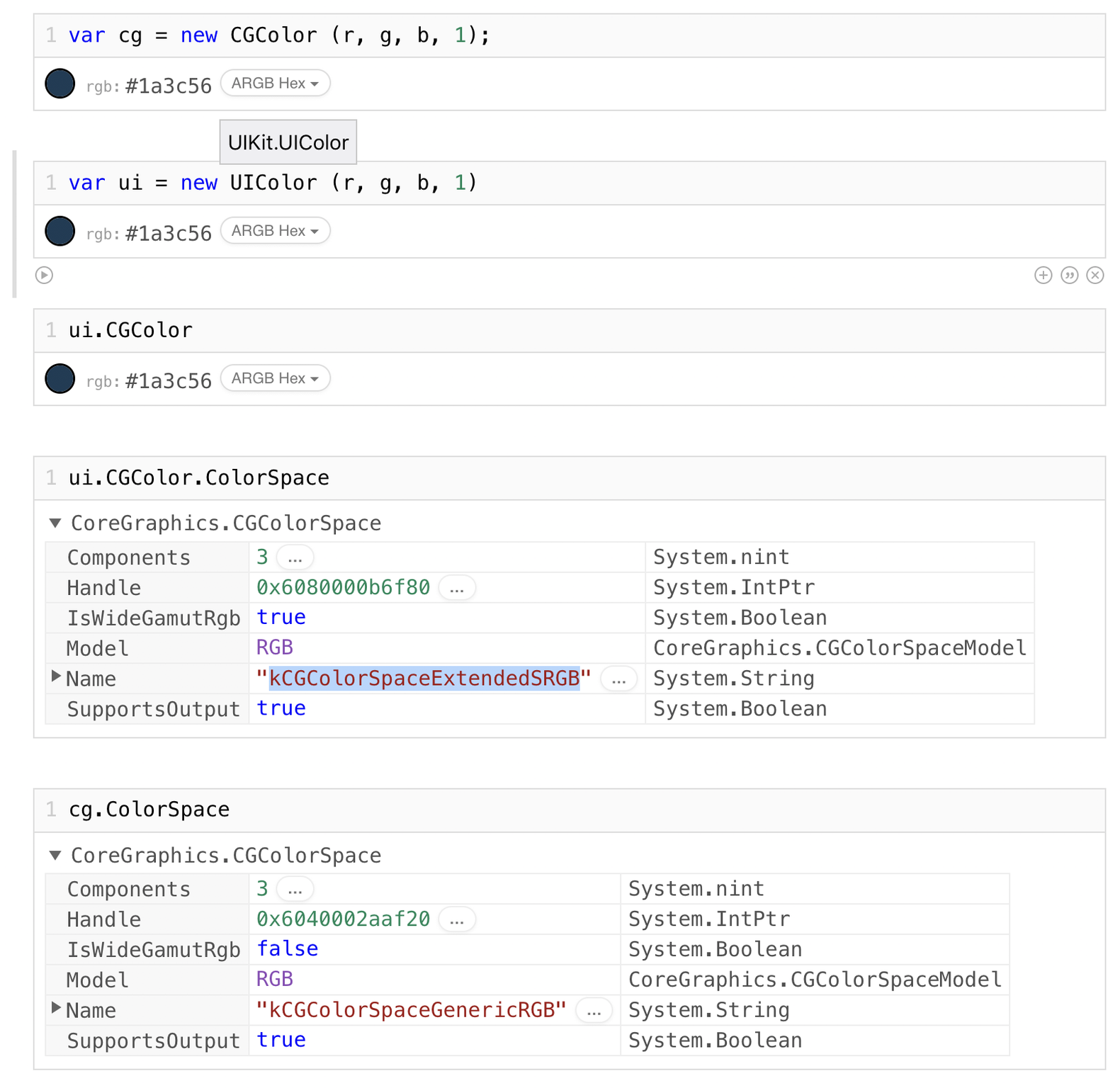

Recently a user filed a bug where the same RGB color, when converted into a UIColor and later into a CGColor, is different than going from the RGB value to a CGColor directly on recent versions of iOS.

You can see the difference here:

What is happening here is that CGColors that are created directly from the RGB values are being created in the kCGColorSpaceGenericRGB colorspace. Starting with iOS 10, UIColor objects are being created with a device specific color space; in my current simulator this value is kCGColorSpaceExtendedSRGB.

You can see the differences in this workbook

Posted on 07 Dec 2017

Mono's TLS 1.2 Update

Just wanted to close the chapter on Mono's TLS 1.2 support which I blogged about more than a year ago.

At the time, I shared the plans that we had for upgrading the support for TLS 1.2.

We released that code in Mono 4.8.0 in February of 2017 which used the BoringSSL stack on Linux and Apple's TLS stack on Xamarin.{Mac,iOS,tvOS,watchOS}.

In Mono 5.0.0 we extracted the TLS support from the Xamarin codebase into the general Mono codebase, and it became available as part of the Mono.framework distribution as well as becoming the default.

Posted on 20 Nov 2017

Creating .NET Bindings for C Libraries with ObjectiveSharpie

We created the ObjectiveSharpie tool to automate the mapping of Objective-C APIs to the .NET world. This is the tool that we use to keep up with Apple APIs.

One of the lesser known features of ObjectiveSharpie is that it is not limited to binding Objective-C header files. It is also capable of creating definitions for C APIs.

To do this, merely use the "bind" command for ObjectiveSharpie and run it on the header file for the API that you want to bind:

sharpie bind c-api.h -o binding.cs

The above command will produce the binding.cs that contains the C# definitions for both the native data structures and the C functions that can be invoked.

Since C APIs are ambiguous, in some cases ObjectiveSharpie will generate some diagnostics. In most cases it will flag methods that have to be bound with the [Verify] attribute. This attribute is used as an indicator in your source code that you need to manually audit the binding, perhaps checking the documentation and adjusting the P/Invoke signature accordingly.

There are various options that you can pass to the bind command; just invoke sharpie bind to get an up-to-date list of configuration options.

This is how I quickly bootstrapped the TensorFlowSharp binding. I got all the P/Invoke signatures done in one go, and then I started to do the work to surface an idiomatic C# API.

Posted on 18 Jan 2017
